Disclaimer

The content of the original book series is licensed under the CC0 license, which permits copying, modification, and distribution. The explanations are copied directly and have been annotated with Rust-specific details where appropriate. Any sentences that include words such as I, me, or mine are from the original authors, Peter Shirley, Trevor David Black or Steve Hollasch. Once again, thank you for this outstanding book series.

Overview

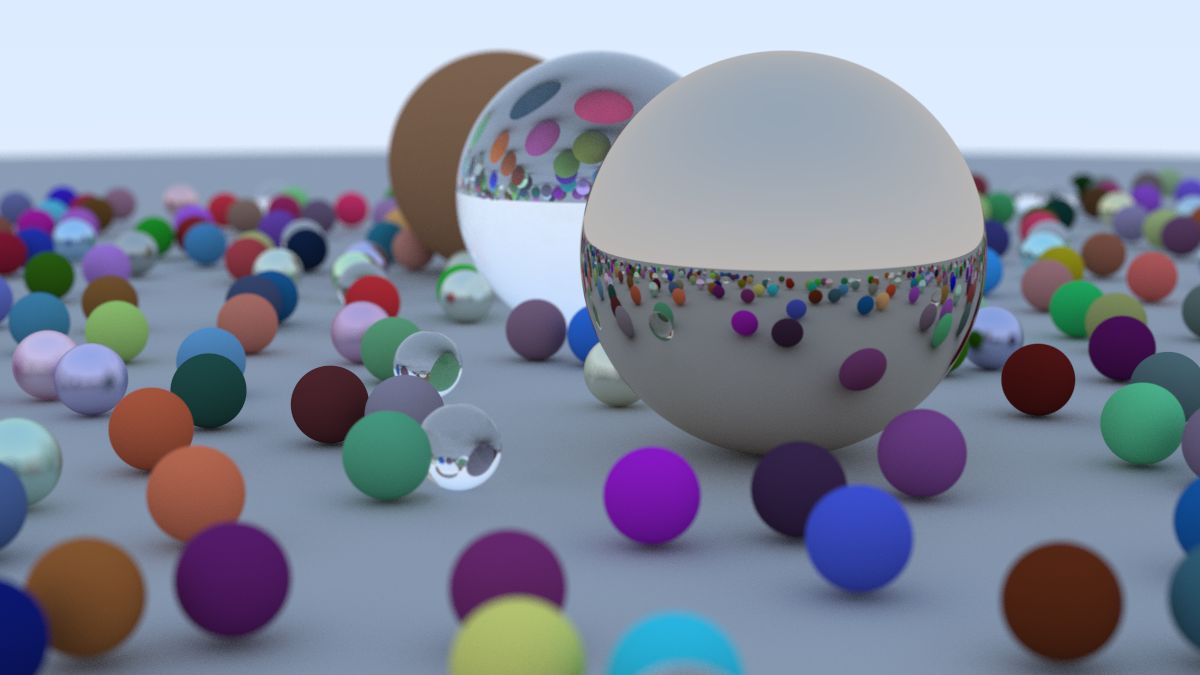

I’ve taught many graphics classes over the years. Often I do them in ray tracing, because you are forced to write all the code, but you can still get cool images with no API. I decided to adapt my course notes into a how-to, to get you to a cool program as quickly as possible. It will not be a full-featured ray tracer, but it does have the indirect lighting which has made ray tracing a staple in movies. Follow these steps, and the architecture of the ray tracer you produce will be good for extending to a more extensive ray tracer if you get excited and want to pursue that.

When somebody says “ray tracing” it could mean many things. What I am going to describe is technically a path tracer, and a fairly general one. While the code will be pretty simple (let the computer do the work!) I think you’ll be very happy with the images you can make.

I’ll take you through writing a ray tracer in the order I do it, along with some debugging tips. By the end, you will have a ray tracer that produces some great images. You should be able to do this in a weekend. If you take longer, don’t worry about it. I use C++ as the driving language, but you don’t need to.1 However, I suggest you do, because it’s fast, portable, and most production movie and video game renderers are written in C++. Note that I avoid most “modern features” of C++, but inheritance and operator overloading are too useful for ray tracers to pass on.2

I do not provide the code online, but the code is real and I show all of it except for a few straightforward operators in the vec3 class. I am a big believer in typing in code to learn it, but when code is available I use it, so I only practice what I preach when the code is not available. So don’t ask! I have left that last part in because it is funny what a 180 I have done. Several readers ended up with subtle errors that were helped when we compared code. So please do type in the code, but you can find the finished source for each book in the RayTracing project on GitHub.

A note on the implementing code for these books — our philosophy for the included code prioritizes the following goals:

- The code should implement the concepts covered in the books.

- We use C++, but as simple as possible. Our programming style is very C-like, but we take advantage of modern features where it makes the code easier to use or understand.

- Our coding style continues the style established from the original books as much as possible, for continuity.

- Line length is kept to 96 characters per line, to keep lines consistent between the codebase and code listings in the books.3

The code thus provides a baseline implementation, with tons of improvements left for the reader to enjoy. There are endless ways one can optimize and modernize the code; we prioritize the simple solution.

We assume a little bit of familiarity with vectors (like dot product and vector addition). If you don’t know that, do a little review. If you need that review, or to learn it for the first time, check out the online Graphics Codex by Morgan McGuire, Fundamentals of Computer Graphics by Steve Marschner and Peter Shirley, or Computer Graphics: Principles and Practice by J.D. Foley and Andy Van Dam.

See the project README file for information about this project, the repository on GitHub, directory structure, building & running, and how to make or reference corrections and contributions.

See our Further Reading wiki page for additional project related resources.

These books have been formatted to print well directly from your browser. We also include PDFs of each book with each release, in the “Assets” section.

If you want to communicate with us, feel free to send us an email at:

- Peter Shirley, ptrshrl@gmail.com

- Steve Hollasch, steve@hollasch.net

- Trevor David Black, trevordblack@trevord.black

Finally, if you run into problems with your implementation, have general questions, or would like to share your own ideas or work, see the GitHub Discussions forum on the GitHub project.

Thanks to everyone who lent a hand on this project. You can find them in the acknowledgments section at the end of this book.

Let’s get on with it!

-

This is where Rust comes into play: a fast, reliable language with better ergonomics. This is an unbiased opinion, of course *cough*. ↩

-

Inheritance is not supported in Rust. In many places simple composition and traits will do the trick. ↩

-

rustfmt is used instead. It ships with the standard Rust toolchain (installed via rustup) and by default keeps lines within 100 characters. ↩

The PPM Image Format

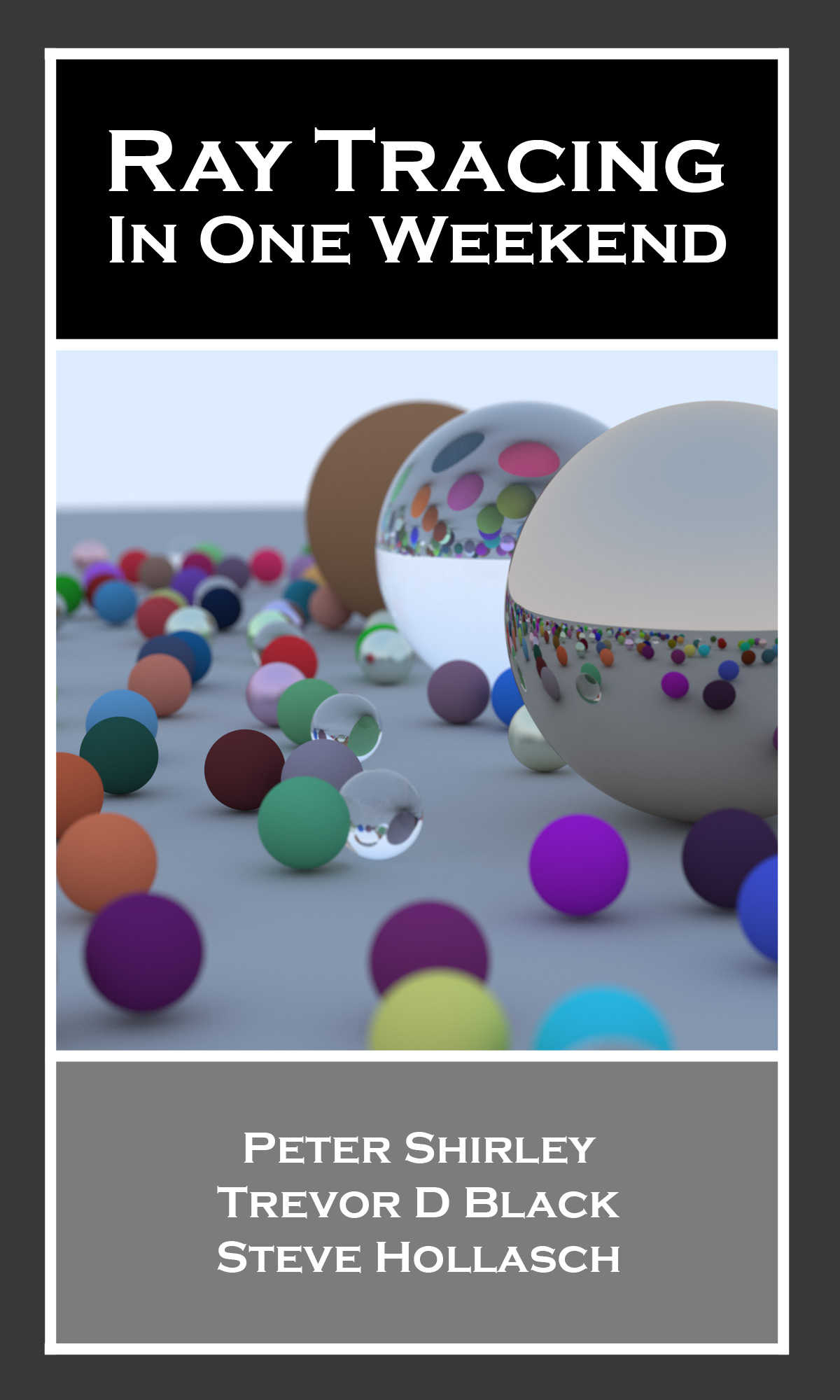

Whenever you start a renderer, you need a way to see an image. The most straightforward way is to write it to a file. The catch is, there are so many formats. Many of those are complex. I always start with a plain text ppm file. Here’s a nice description from Wikipedia:

Figure 1: PPM Example

Let’s make some C++ code to output such a thing:1

fn main() {
    // Image

    const IMAGE_WIDTH: u32 = 256;
    const IMAGE_HEIGHT: u32 = 256;

    // Render

    println!("P3");
    println!("{IMAGE_WIDTH} {IMAGE_HEIGHT}");
    println!("255");

    for j in 0..IMAGE_HEIGHT {
        for i in 0..IMAGE_WIDTH {
            let r = i as f64 / (IMAGE_WIDTH - 1) as f64;
            let g = j as f64 / (IMAGE_HEIGHT - 1) as f64;
            let b = 0.0;

            let ir = (255.999 * r) as i32;
            let ig = (255.999 * g) as i32;
            let ib = (255.999 * b) as i32;

            println!("{ir} {ig} {ib}");
        }
    }
}

Listing 1: [main.rs] Creating your first image

There are some things to note in this code:

- The pixels are written out in rows.

- Every row of pixels is written out left to right.

- These rows are written out from top to bottom.

- By convention, each of the red/green/blue components is represented internally by a real-valued variable that ranges from 0.0 to 1.0. These must be scaled to integer values between 0 and 255 before we print them out.

- Red goes from fully off (black) to fully on (bright red) from left to right, and green goes from fully off at the top (black) to fully on at the bottom (bright green). Adding red and green light together makes yellow, so we should expect the bottom right corner to be yellow.
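The 255.999 factor deserves a quick check. The minimal sketch below pulls the conversion into a helper (to_byte is a hypothetical name, not part of the book's code). It shows that the factor maps 1.0 to 255 while as i32 truncates toward zero, so every component in [0.0, 1.0] lands in the PPM range 0 to 255. A factor of 256.0 would map 1.0 to 256, one past the maximum.

```rust
/// Hypothetical helper: converts a color component in [0.0, 1.0] to a PPM
/// byte value in [0, 255], mirroring `(255.999 * r) as i32` in Listing 1.
fn to_byte(component: f64) -> i32 {
    // `as i32` truncates toward zero, so 255.999 * 1.0 = 255.999 becomes 255.
    (255.999 * component) as i32
}

fn main() {
    for c in [0.0, 0.5, 1.0] {
        println!("{c} -> {}", to_byte(c));
    }
    // With 256.0 instead, the top of the range overshoots 255.
    println!("with 256.0: 1.0 -> {}", (256.0 * 1.0) as i32);
}
```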

-

It is Rust code of course. This won't be annotated anymore. ↩

Creating an Image File

Because the file is written to the standard output stream, you'll need to redirect it to an image file. Typically this is done from the command-line by using the > redirection operator.

On Windows, you'd get the debug build from CMake running this command:1

cargo b

Then run your newly-built program like so:2

cargo r > image.ppm

Later, it will be better to run optimized builds for speed. In that case, you would build like this:

cargo b -r

and would run the optimized program like this:

cargo r -r > image.ppm

The examples above assume that you are building with CMake, using the same approach as the CMakeLists.txt file in the included source. Use whatever build environment (and language) you're most comfortable with.

On Mac or Linux, release build, you would launch the program like this:3

cargo r -r > image.ppm

Complete building and running instructions can be found in the project README.

Opening the output file (in ToyViewer on my Mac, but try it in your favorite image viewer and Google “ppm viewer” if your viewer doesn’t support it) shows this result:

Image 1: First PPM image

Hooray! This is the graphics “hello world”. If your image doesn’t look like that, open the output file in a text editor and see what it looks like. It should start something like this:

P3

256 256

255

0 0 0

1 0 0

2 0 0

3 0 0

4 0 0

5 0 0

6 0 0

7 0 0

8 0 0

9 0 0

10 0 0

11 0 0

12 0 0

...

Listing 2: First image output

If your PPM file doesn't look like this, then double-check your formatting code. If it does look like this but fails to render, then you may have line-ending differences or something similar that is confusing your image viewer. To help debug this, you can find a file test.ppm in the images directory of the GitHub project. It should help you confirm that your viewer can handle the PPM format, and it also serves as a comparison against your generated PPM file.

Some readers have reported problems viewing their generated files on Windows. In this case, the problem is often that the PPM is written out as UTF-16, often from PowerShell. If you run into this problem, see Discussion 1114 for help with this issue.
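One way to sidestep shell-redirection encoding issues entirely is to have the program open the output file itself. The sketch below is an alternative, not the approach this series follows: write_gradient_ppm is a hypothetical helper that writes the Listing 1 gradient through a BufWriter, and it assumes width and height are both at least 2 so the (width - 1) and (height - 1) divisors are nonzero. The bytes written are exactly the ASCII text of the format strings, with no shell in between to re-encode them.

```rust
use std::fs::File;
use std::io::{BufWriter, Write};
use std::path::Path;

/// Hypothetical helper: writes the Listing 1 gradient directly to `path`
/// instead of standard output. Assumes width >= 2 and height >= 2.
fn write_gradient_ppm(path: &Path, width: u32, height: u32) -> std::io::Result<()> {
    // BufWriter batches the many small writes into fewer system calls.
    let mut out = BufWriter::new(File::create(path)?);

    writeln!(out, "P3")?;
    writeln!(out, "{width} {height}")?;
    writeln!(out, "255")?;

    for j in 0..height {
        for i in 0..width {
            let r = i as f64 / (width - 1) as f64;
            let g = j as f64 / (height - 1) as f64;
            writeln!(out, "{} {} 0", (255.999 * r) as i32, (255.999 * g) as i32)?;
        }
    }

    // Flush explicitly: an error during the implicit flush on drop would be
    // silently ignored.
    out.flush()
}

fn main() -> std::io::Result<()> {
    write_gradient_ppm(Path::new("image.ppm"), 256, 256)
}
```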

If everything displays correctly, then you're pretty much done with system and IDE issues: everything in the remainder of this series uses this same simple mechanism for generating rendered images.

If you want to produce other image formats, I am a fan of stb_image.h, a header-only image library available on GitHub at https://github.com/nothings/stb.4

-

We are not using CMake; we are relying on the standard cargo build tool. ↩

-

You can skip the building step, since it is part of the run command (run, or r for short). ↩

-

It is the same as for Windows. ↩

-

Rust crate alternative: https://crates.io/crates/stb_image. ↩

Adding a Progress Indicator

Before we continue, let's add a progress indicator to our output. This is a handy way to track the progress of a long render, and also to possibly identify a run that's stalled out due to an infinite loop or other problem.

Our program outputs the image to the standard output stream (std::cout), so leave that alone and instead write to the logging output stream (std::clog):1

diff --git a/src/main.rs b/src/main.rs

index af636bc..00cad27 100644

--- a/src/main.rs

+++ b/src/main.rs

@@ -1,26 +1,29 @@

fn main() {

// Image

const IMAGE_WIDTH: u32 = 256;

const IMAGE_HEIGHT: u32 = 256;

// Render

+ env_logger::init();

println!("P3");

println!("{IMAGE_WIDTH} {IMAGE_HEIGHT}");

println!("255");

for j in 0..IMAGE_HEIGHT {

+ log::info!("Scanlines remaining: {}", IMAGE_HEIGHT - j);

for i in 0..IMAGE_WIDTH {

let r = i as f64 / (IMAGE_WIDTH - 1) as f64;

let g = j as f64 / (IMAGE_HEIGHT - 1) as f64;

let b = 0.0;

let ir = (255.999 * r) as i32;

let ig = (255.999 * g) as i32;

let ib = (255.999 * b) as i32;

println!("{ir} {ig} {ib}");

}

}

+ log::info!("Done.");

}

Listing 3: [main.rs] Main render loop with progress reporting

Now when running, you'll see a running count of the number of scanlines remaining. Hopefully this runs so fast that you don't even see it! Don't worry — you'll have lots of time in the future to watch a slowly updating progress line as we expand our ray tracer.
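If you would rather not pull in the log and env_logger crates, a dependency-free sketch in the spirit of the original C++ progress line writes to standard error with eprint! and rewrites a single line in place using a carriage return. The helper name progress_line is hypothetical. Rust's standard error handle is unbuffered, so no explicit flush is needed for the update to appear.

```rust
/// Hypothetical helper: formats one progress update. The leading '\r' moves
/// the cursor back to the start of the line so each update overwrites the
/// last; the trailing space blanks a leftover digit when the count drops
/// from, say, 10 to 9.
fn progress_line(remaining: u32) -> String {
    format!("\rScanlines remaining: {remaining} ")
}

fn main() {
    const IMAGE_HEIGHT: u32 = 256;
    for j in 0..IMAGE_HEIGHT {
        // eprint! writes to stderr, so the PPM data on stdout stays clean.
        eprint!("{}", progress_line(IMAGE_HEIGHT - j));
        // ... write scanline j to stdout here ...
    }
    // Trailing spaces overwrite the tail of the last progress message.
    eprintln!("\rDone.                 ");
}
```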

-

The log crate with the env_logger implementation is a good alternative to std::clog. Run RUST_LOG=info cargo r > image.ppm to see the log output. env_logger writes to standard error by default, so the log lines do not end up in image.ppm. ↩

The vec3 Class 1

Almost all graphics programs have some class(es) for storing geometric vectors and colors. In many systems these vectors are 4D (3D position plus a homogeneous coordinate for geometry, or RGB plus an alpha transparency component for colors). For our purposes, three coordinates suffice. We’ll use the same class vec3 for colors, locations, directions, offsets, whatever. Some people don’t like this because it doesn’t prevent you from doing something silly, like subtracting a position from a color. They have a good point, but we’re going to always take the “less code” route when not obviously wrong. In spite of this, we do declare two aliases for vec3: point3 and color. Since these two types are just aliases for vec3, you won't get warnings if you pass a color to a function expecting a point3, and nothing is stopping you from adding a point3 to a color, but it makes the code a little bit easier to read and to understand.

We define the vec3 class in the top half of a new vec3.rs file, and define a set of useful vector utility functions in the bottom half:

use std::{

fmt::Display,

ops::{Add, AddAssign, Div, DivAssign, Index, IndexMut, Mul, MulAssign, Neg, Sub},

};

#[derive(Debug, Default, Clone, Copy)]

pub struct Vec3 {

pub e: [f64; 3],

}

pub type Point3 = Vec3;

impl Vec3 {

pub fn new(e0: f64, e1: f64, e2: f64) -> Self {

Self { e: [e0, e1, e2] }

}

pub fn x(&self) -> f64 {

self.e[0]

}

pub fn y(&self) -> f64 {

self.e[1]

}

pub fn z(&self) -> f64 {

self.e[2]

}

pub fn length(&self) -> f64 {

f64::sqrt(self.length_squared())

}

pub fn length_squared(&self) -> f64 {

self.e[0] * self.e[0] + self.e[1] * self.e[1] + self.e[2] * self.e[2]

}

}

impl Neg for Vec3 {

type Output = Self;

fn neg(self) -> Self::Output {

Self::Output {

e: self.e.map(|e| -e),

}

}

}

impl Index<usize> for Vec3 {

type Output = f64;

fn index(&self, index: usize) -> &Self::Output {

&self.e[index]

}

}

impl IndexMut<usize> for Vec3 {

fn index_mut(&mut self, index: usize) -> &mut Self::Output {

&mut self.e[index]

}

}

impl AddAssign for Vec3 {

fn add_assign(&mut self, rhs: Self) {

self.e[0] += rhs.e[0];

self.e[1] += rhs.e[1];

self.e[2] += rhs.e[2];

}

}

impl MulAssign<f64> for Vec3 {

fn mul_assign(&mut self, rhs: f64) {

self.e[0] *= rhs;

self.e[1] *= rhs;

self.e[2] *= rhs;

}

}

impl DivAssign<f64> for Vec3 {

fn div_assign(&mut self, rhs: f64) {

self.mul_assign(1.0 / rhs);

}

}

impl Display for Vec3 {

fn fmt(&self, f: &mut std::fmt::Formatter<'_>) -> std::fmt::Result {

write!(f, "{} {} {}", self.e[0], self.e[1], self.e[2])

}

}

impl Add for Vec3 {

type Output = Self;

fn add(self, rhs: Self) -> Self::Output {

Self::Output {

e: [

self.e[0] + rhs.e[0],

self.e[1] + rhs.e[1],

self.e[2] + rhs.e[2],

],

}

}

}

impl Sub for Vec3 {

type Output = Self;

fn sub(self, rhs: Self) -> Self::Output {

Self::Output {

e: [

self.e[0] - rhs.e[0],

self.e[1] - rhs.e[1],

self.e[2] - rhs.e[2],

],

}

}

}

impl Mul for Vec3 {

type Output = Self;

fn mul(self, rhs: Self) -> Self::Output {

Self::Output {

e: [

self.e[0] * rhs.e[0],

self.e[1] * rhs.e[1],

self.e[2] * rhs.e[2],

],

}

}

}

impl Mul<f64> for Vec3 {

type Output = Self;

fn mul(self, rhs: f64) -> Self::Output {

Self::Output {

e: [self.e[0] * rhs, self.e[1] * rhs, self.e[2] * rhs],

}

}

}

impl Mul<Vec3> for f64 {

type Output = Vec3;

fn mul(self, rhs: Vec3) -> Self::Output {

rhs.mul(self)

}

}

impl Div<f64> for Vec3 {

type Output = Self;

fn div(self, rhs: f64) -> Self::Output {

self * (1.0 / rhs)

}

}

#[inline]

pub fn dot(u: Vec3, v: Vec3) -> f64 {

u.e[0] * v.e[0] + u.e[1] * v.e[1] + u.e[2] * v.e[2]

}

#[inline]

pub fn cross(u: Vec3, v: Vec3) -> Vec3 {

Vec3::new(

u.e[1] * v.e[2] - u.e[2] * v.e[1],

u.e[2] * v.e[0] - u.e[0] * v.e[2],

u.e[0] * v.e[1] - u.e[1] * v.e[0],

)

}

#[inline]

pub fn unit_vector(v: Vec3) -> Vec3 {

v / v.length()

}

Listing 4: [vec3.rs] vec3 definitions and helper functions

We use double (f64 in Rust) here, but some ray tracers use float (f32). double has greater precision and range, but is twice the size of float. This increase in size may be important if you're programming in limited memory conditions (such as hardware shaders). Either one is fine, so follow your own tastes.
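To see the size difference concretely, here is a small sketch. The Real alias is a hypothetical convenience, not something the book's code defines: routing every component through one alias would make switching between f64 and f32 a one-line change. size_of, type_name and the EPSILON constants are standard library items.

```rust
use std::mem::size_of;

// Hypothetical alias: change this to f32 to halve the size of every vector.
type Real = f64;

// Same data layout as the book's Vec3, without the methods.
#[allow(dead_code)]
#[derive(Debug, Default, Clone, Copy)]
struct Vec3 {
    e: [Real; 3],
}

fn main() {
    // Three 8-byte f64 components: 24 bytes. With f32 it would be 12.
    println!("Vec3 with {}: {} bytes", std::any::type_name::<Real>(), size_of::<Vec3>());
    println!("[f32; 3]: {} bytes", size_of::<[f32; 3]>());

    // EPSILON is the gap between 1.0 and the next larger representable
    // value, a rough measure of each type's relative precision.
    println!("f64::EPSILON = {:e}", f64::EPSILON);
    println!("f32::EPSILON = {:e}", f32::EPSILON);
}
```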

-

There are no classes in Rust. They are replaced with structs. ↩

Color Utility Functions

Using our new vec3 class, we'll create a new color.rs file and define a utility function that writes a single pixel's color to an output stream (in main, that stream is standard output).

use crate::vec3::Vec3;

pub type Color = Vec3;

pub fn write_color(mut out: impl std::io::Write, pixel_color: Color) -> std::io::Result<()> {

let r = pixel_color.x();

let g = pixel_color.y();

let b = pixel_color.z();

let rbyte = (255.999 * r) as i32;

let gbyte = (255.999 * g) as i32;

let bbyte = (255.999 * b) as i32;

writeln!(out, "{rbyte} {gbyte} {bbyte}")

}

Listing 5: [color.rs] color utility functions

Now we can change our main to use both of these:

diff --git a/src/main.rs b/src/main.rs

index 00cad27..bb37ee6 100644

--- a/src/main.rs

+++ b/src/main.rs

@@ -1,29 +1,30 @@

-fn main() {

+use code::color::{Color, write_color};

+

+fn main() -> Result<(), Box<dyn std::error::Error>> {

// Image

const IMAGE_WIDTH: u32 = 256;

const IMAGE_HEIGHT: u32 = 256;

// Render

env_logger::init();

println!("P3");

println!("{IMAGE_WIDTH} {IMAGE_HEIGHT}");

println!("255");

for j in 0..IMAGE_HEIGHT {

log::info!("Scanlines remaining: {}", IMAGE_HEIGHT - j);

for i in 0..IMAGE_WIDTH {

- let r = i as f64 / (IMAGE_WIDTH - 1) as f64;

- let g = j as f64 / (IMAGE_HEIGHT - 1) as f64;

- let b = 0.0;

-

- let ir = (255.999 * r) as i32;

- let ig = (255.999 * g) as i32;

- let ib = (255.999 * b) as i32;

-

- println!("{ir} {ig} {ib}");

+ let pixel_color = Color::new(

+ i as f64 / (IMAGE_WIDTH - 1) as f64,

+ j as f64 / (IMAGE_HEIGHT - 1) as f64,

+ 0.0,

+ );

+ write_color(std::io::stdout(), pixel_color)?;

}

}

log::info!("Done.");

+

+ Ok(())

}

Listing 6: [main.rs] Final code for the first PPM image

And you should get the exact same picture as before.
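Because write_color takes any impl std::io::Write rather than writing to stdout directly, it can be exercised without a terminal. The sketch below uses a trimmed-down stand-in Color struct (hypothetical, with named r, g, b fields in place of the book's Vec3 alias) so that it compiles on its own, and writes into an in-memory Vec<u8>. Passing &mut buf works because &mut W implements Write whenever W does, so the buffer remains usable afterward.

```rust
use std::io::Write;

// Hypothetical stand-in for the book's Color (a Vec3 alias), reduced to the
// three components write_color reads.
#[derive(Debug, Clone, Copy)]
struct Color {
    r: f64,
    g: f64,
    b: f64,
}

// Same conversion as Listing 5: scale [0, 1] to [0, 255] and truncate.
fn write_color(mut out: impl Write, pixel_color: Color) -> std::io::Result<()> {
    let rbyte = (255.999 * pixel_color.r) as i32;
    let gbyte = (255.999 * pixel_color.g) as i32;
    let bbyte = (255.999 * pixel_color.b) as i32;
    writeln!(out, "{rbyte} {gbyte} {bbyte}")
}

fn main() -> std::io::Result<()> {
    let mut buf: Vec<u8> = Vec::new();
    write_color(&mut buf, Color { r: 1.0, g: 0.5, b: 0.0 })?;
    // The output is plain ASCII digits and spaces, so UTF-8 decoding succeeds.
    let text = String::from_utf8(buf).expect("PPM output is ASCII");
    print!("{text}");
    Ok(())
}
```

In the render loop, locking standard output once (std::io::stdout().lock()) and passing &mut to the lock, optionally wrapped in a BufWriter, may reduce per-pixel overhead; the listings keep the simpler per-pixel std::io::stdout() call.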

The ray Class

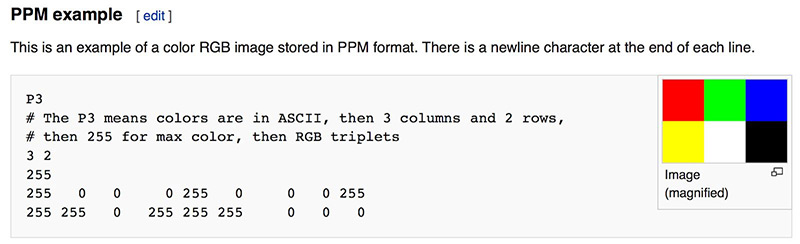

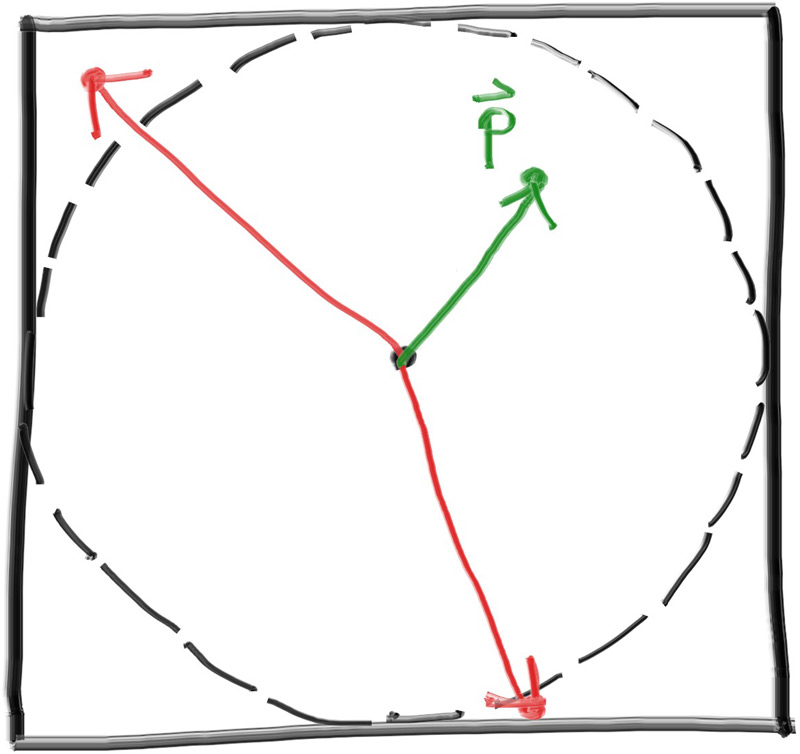

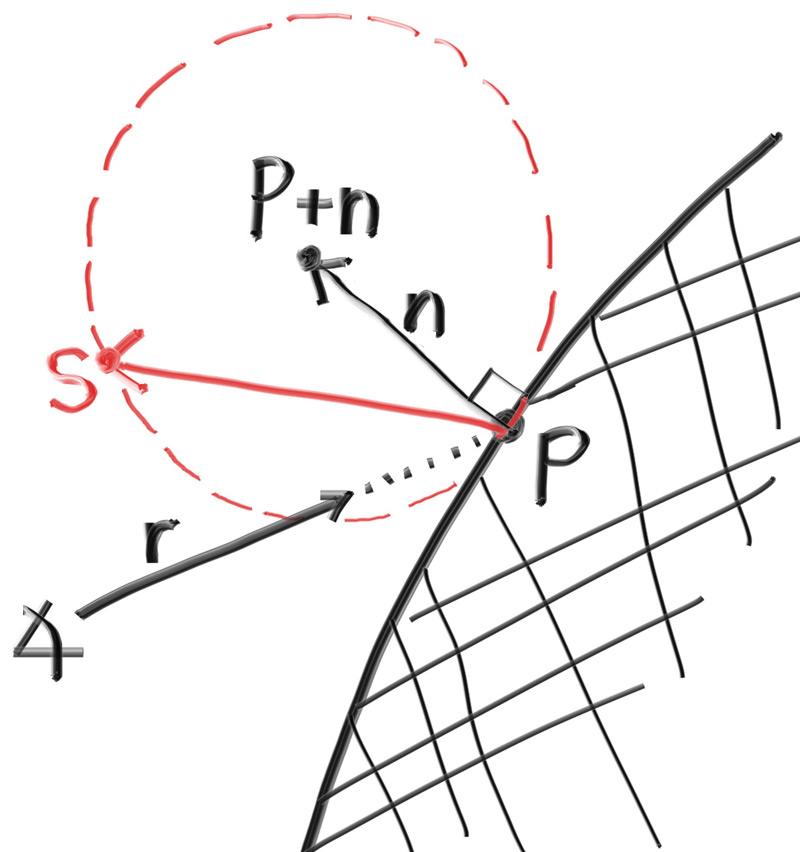

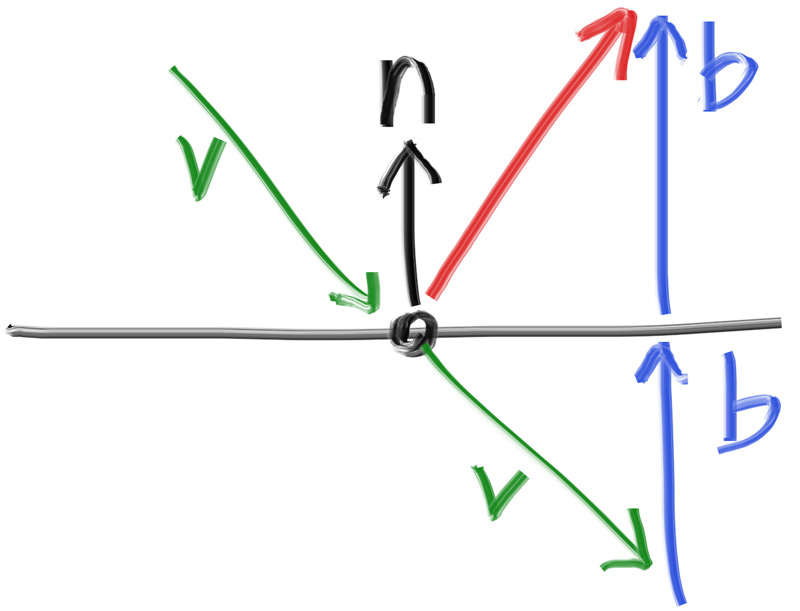

The one thing that all ray tracers have is a ray class and a computation of what color is seen along a ray. Let’s think of a ray as a function \( \mathbf{P} (t) = \mathbf{A} + t \mathbf{b} \). Here \( \mathbf{P} \) is a 3D position along a line in 3D. \( \mathbf{A} \) is the ray origin and \( \mathbf{b} \) is the ray direction. The ray parameter \( t \) is a real number (double in the code). Plug in a different \( t \) and \( \mathbf{P} (t) \) moves the point along the ray. Add in negative \( t \) values and you can go anywhere on the 3D line. For positive \( t \), you get only the parts in front of \( \mathbf{A} \), and this is what is often called a half-line or a ray.

Figure 2: Linear interpolation

We can represent the idea of a ray as a class, and represent the function \( \mathbf{P} (t) \) as a function that we'll call ray::at(t):

use crate::vec3::{Point3, Vec3};

#[derive(Debug, Default, Clone, Copy)]

pub struct Ray {

origin: Point3,

direction: Vec3,

}

impl Ray {

pub fn new(origin: Point3, direction: Vec3) -> Self {

Self { origin, direction }

}

pub fn origin(&self) -> Point3 {

self.origin

}

pub fn direction(&self) -> Vec3 {

self.direction

}

pub fn at(&self, t: f64) -> Point3 {

self.origin + t * self.direction

}

}

Listing 7: [ray.rs] The ray class

(For those unfamiliar with C++, the functions ray::origin() and ray::direction() both return an immutable reference to their members. Callers can either just use the reference directly, or make a mutable copy depending on their needs.) 1
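A worked example makes \( \mathbf{P}(t) = \mathbf{A} + t \mathbf{b} \) concrete. The sketch below carries trimmed-down copies of Vec3, Point3 and Ray (only the pieces Ray::at needs, so it compiles on its own) and evaluates a ray starting at (1, 2, 3) pointing along \( -z \). It also shows the consequence of Vec3 being Copy: direction() hands back an independent value, so changing that copy leaves the ray untouched.

```rust
use std::ops::{Add, Mul};

// Trimmed-down copies of Vec3/Point3 and Ray from Listings 4 and 7,
// keeping only what Ray::at needs.
#[derive(Debug, Default, Clone, Copy, PartialEq)]
struct Vec3 {
    e: [f64; 3],
}
type Point3 = Vec3;

impl Vec3 {
    fn new(e0: f64, e1: f64, e2: f64) -> Self {
        Self { e: [e0, e1, e2] }
    }
}

impl Add for Vec3 {
    type Output = Self;
    fn add(self, rhs: Self) -> Self {
        Self::new(self.e[0] + rhs.e[0], self.e[1] + rhs.e[1], self.e[2] + rhs.e[2])
    }
}

impl Mul<Vec3> for f64 {
    type Output = Vec3;
    fn mul(self, rhs: Vec3) -> Vec3 {
        Vec3::new(self * rhs.e[0], self * rhs.e[1], self * rhs.e[2])
    }
}

#[derive(Debug, Default, Clone, Copy)]
struct Ray {
    origin: Point3,
    direction: Vec3,
}

impl Ray {
    fn new(origin: Point3, direction: Vec3) -> Self {
        Self { origin, direction }
    }
    fn direction(&self) -> Vec3 {
        self.direction
    }
    // P(t) = A + t b
    fn at(&self, t: f64) -> Point3 {
        self.origin + t * self.direction
    }
}

fn main() {
    let r = Ray::new(Point3::new(1.0, 2.0, 3.0), Vec3::new(0.0, 0.0, -1.0));
    // t = 0 is the origin; t = 2 moves two direction-lengths along -z.
    println!("{:?}", r.at(0.0).e); // [1.0, 2.0, 3.0]
    println!("{:?}", r.at(2.0).e); // [1.0, 2.0, 1.0]

    // direction() returns a copy; mutating it does not affect the ray.
    let mut d = r.direction();
    d.e[2] = 5.0;
    println!("{:?} vs {:?}", d.e, r.direction().e);
}
```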

-

The careful reader may have noticed that, in the Rust approach, both the Vec3 and Ray structs implement Copy, so origin() and direction() return copies rather than references. For small types like these, returning by value is generally more common than returning a reference and cloning it when mutability is needed. ↩

Sending Rays Into the Scene

Now we are ready to turn the corner and make a ray tracer. At its core, a ray tracer sends rays through pixels and computes the color seen in the direction of those rays. The steps involved are:

- Calculate the ray from the “eye” through the pixel,

- Determine which objects the ray intersects, and

- Compute a color for the closest intersection point.

When first developing a ray tracer, I always do a simple camera for getting the code up and running.

I’ve often gotten into trouble using square images for debugging because I transpose \( x \) and \( y \) too often, so we’ll use a non-square image. A square image has a 1∶1 aspect ratio, because its width is the same as its height. Since we want a non-square image, we'll choose 16∶9 because it's so common. A 16∶9 aspect ratio means that the ratio of image width to image height is 16∶9. Put another way, given an image with a 16∶9 aspect ratio,

\[ width\,/\,height = 16\,/\,9 \approx 1.7778 \]

For a practical example, an image 800 pixels wide by 400 pixels high has a 2∶1 aspect ratio.

The image's aspect ratio can be determined from the ratio of its width to its height. However, since we have a given aspect ratio in mind, it's easier to set the image's width and the aspect ratio, and then use these to calculate its height. This way, we can scale the image up or down by changing the image width, and it won't throw off our desired aspect ratio. We do have to make sure that when we solve for the image height the resulting height is at least 1.
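The height calculation can be pulled out into a plain function to try different widths. In this sketch, image_height as a standalone function is hypothetical; the listing below computes the same value inline in a const block. Note that Ord::max cannot be called in a const context on stable Rust, which is likely why the listing spells out the clamp with an if-expression instead.

```rust
/// Hypothetical helper: image height from a chosen width and ideal aspect
/// ratio, rounded down and clamped to at least 1.
fn image_height(image_width: i32, aspect_ratio: f64) -> i32 {
    // `as i32` truncates toward zero, i.e. rounds down for positive values.
    let h = (image_width as f64 / aspect_ratio) as i32;
    // Very narrow images would otherwise get a height of 0.
    h.max(1)
}

fn main() {
    let aspect_ratio = 16.0 / 9.0;
    for width in [1920, 400, 1] {
        println!("width {width} -> height {}", image_height(width, aspect_ratio));
    }
}
```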

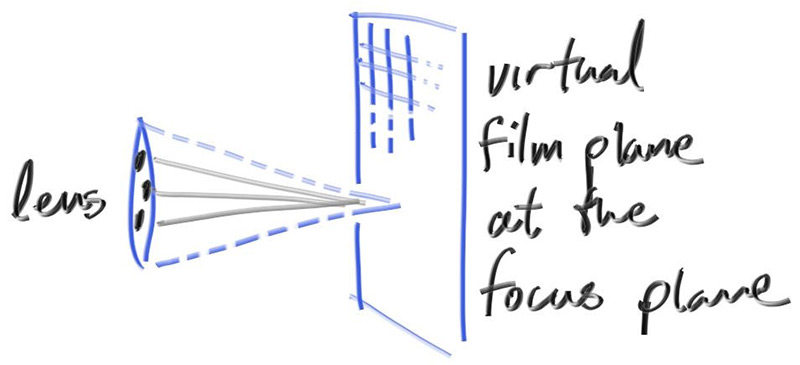

In addition to setting up the pixel dimensions for the rendered image, we also need to set up a virtual viewport through which to pass our scene rays. The viewport is a virtual rectangle in the 3D world that contains the grid of image pixel locations. If pixels are spaced the same distance horizontally as they are vertically, the viewport that bounds them will have the same aspect ratio as the rendered image. The distance between two adjacent pixels is called the pixel spacing, and square pixels are the standard.

To start things off, we'll choose an arbitrary viewport height of 2.0, and scale the viewport width to give us the desired aspect ratio. Here's a snippet of what this code will look like:

use code::color::{Color, write_color};

fn main() -> Result<(), Box<dyn std::error::Error>> {

// Image

const ASPECT_RATIO: f64 = 16.0 / 9.0;

const IMAGE_WIDTH: i32 = 400;

// Calculate the image height, and ensure that it's at least 1.

const IMAGE_HEIGHT: i32 = {

let image_height = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;

if image_height < 1 { 1 } else { image_height }

};

// Viewport widths less than one are ok since they are real valued.

let viewport_height = 2.0;

let viewport_width = viewport_height * (IMAGE_WIDTH as f64) / (IMAGE_HEIGHT as f64);

// Render

env_logger::init();

println!("P3");

println!("{IMAGE_WIDTH} {IMAGE_HEIGHT}");

println!("255");

for j in 0..IMAGE_HEIGHT {

log::info!("Scanlines remaining: {}", IMAGE_HEIGHT - j);

for i in 0..IMAGE_WIDTH {

let pixel_color = Color::new(

i as f64 / (IMAGE_WIDTH - 1) as f64,

j as f64 / (IMAGE_HEIGHT - 1) as f64,

0.0,

);

write_color(std::io::stdout(), pixel_color)?;

}

}

log::info!("Done.");

Ok(())

}

Listing 8: Rendered image setup

If you're wondering why we don't just use aspect_ratio when computing viewport_width, it's because the value set to aspect_ratio is the ideal ratio, which may not be the actual ratio between image_width and image_height. If image_height were allowed to be real valued, rather than just an integer, then it would be fine to use aspect_ratio. But the actual ratio between image_width and image_height can vary based on two parts of the code. First, image_height is rounded down to the nearest integer, which can increase the ratio. Second, we don't allow image_height to be less than one, which can also change the actual aspect ratio.

Note that aspect_ratio is an ideal ratio, which we approximate as best as possible with the integer-based ratio of image width over image height. In order for our viewport proportions to exactly match our image proportions, we use the calculated image aspect ratio to determine our final viewport width.
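The two effects described above are easy to observe numerically. In this sketch (image_height is a hypothetical helper repeating the listing's round-down-and-clamp logic), a width of 401 rounds down to a height of 225, giving an actual ratio slightly wider than 16∶9, while a width of 1 is clamped to a 1 by 1 image whose ratio is 1.0. The viewport width is derived from the actual ratio, exactly as in the listing.

```rust
/// Same height calculation as Listing 8: round down, clamp to at least 1.
fn image_height(image_width: i32, aspect_ratio: f64) -> i32 {
    ((image_width as f64 / aspect_ratio) as i32).max(1)
}

fn main() {
    let ideal = 16.0 / 9.0;
    let viewport_height = 2.0;
    for width in [400, 401, 1] {
        let height = image_height(width, ideal);
        // The ratio the pixel grid actually has; the viewport must match it.
        let actual = width as f64 / height as f64;
        let viewport_width = viewport_height * actual;
        println!(
            "{width}x{height}: ideal {ideal:.4}, actual {actual:.4}, viewport width {viewport_width:.4}"
        );
    }
}
```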

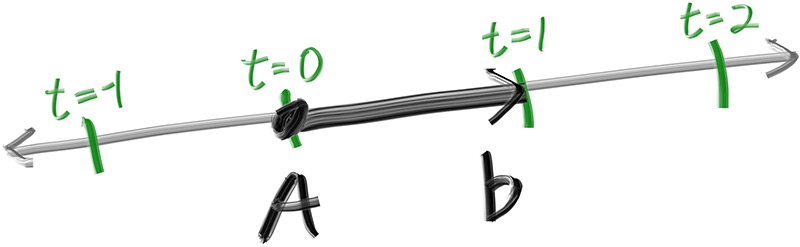

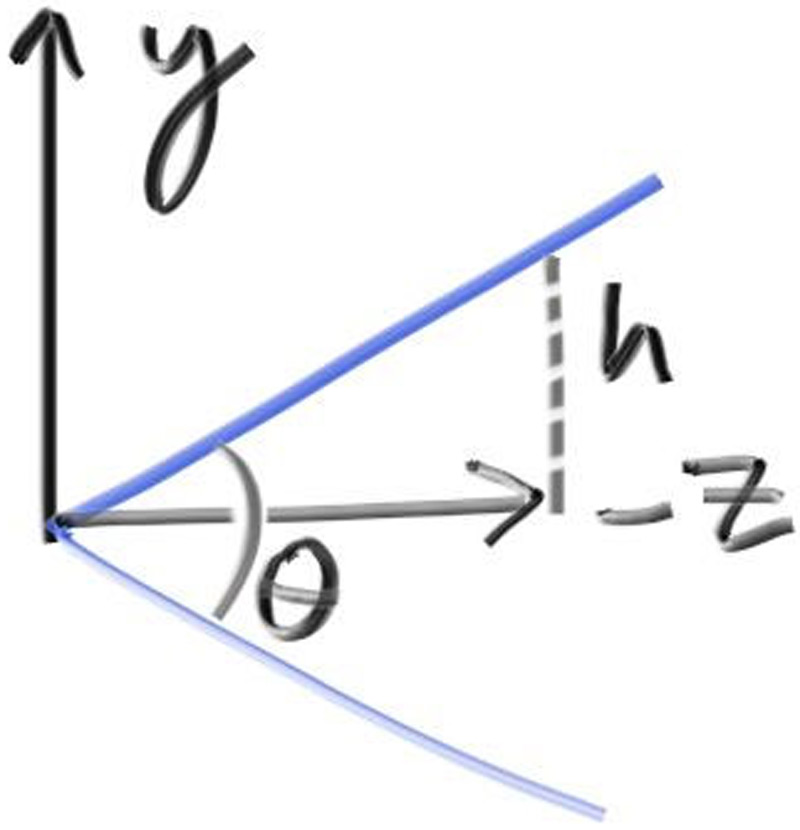

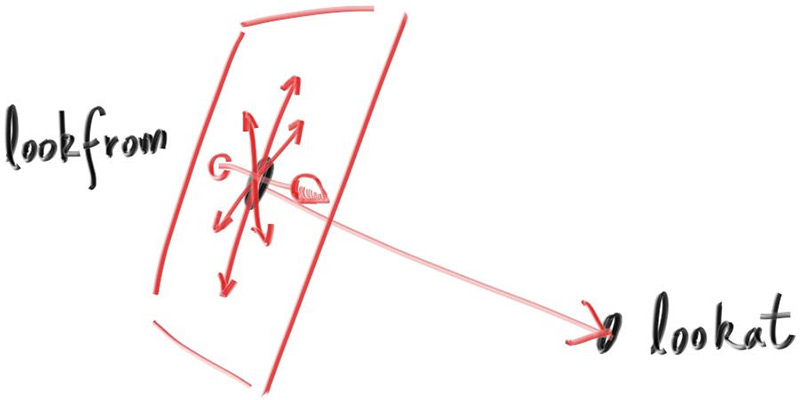

Next we will define the camera center: a point in 3D space from which all scene rays will originate (this is also commonly referred to as the eye point). The vector from the camera center to the viewport center will be orthogonal to the viewport. We'll initially set the distance between the viewport and the camera center point to be one unit. This distance is often referred to as the focal length.

For simplicity we'll start with the camera center at \( (0,0,0) \). We'll also have the y-axis go up, the x-axis to the right, and the negative z-axis pointing in the viewing direction. (This is commonly referred to as right-handed coordinates.)

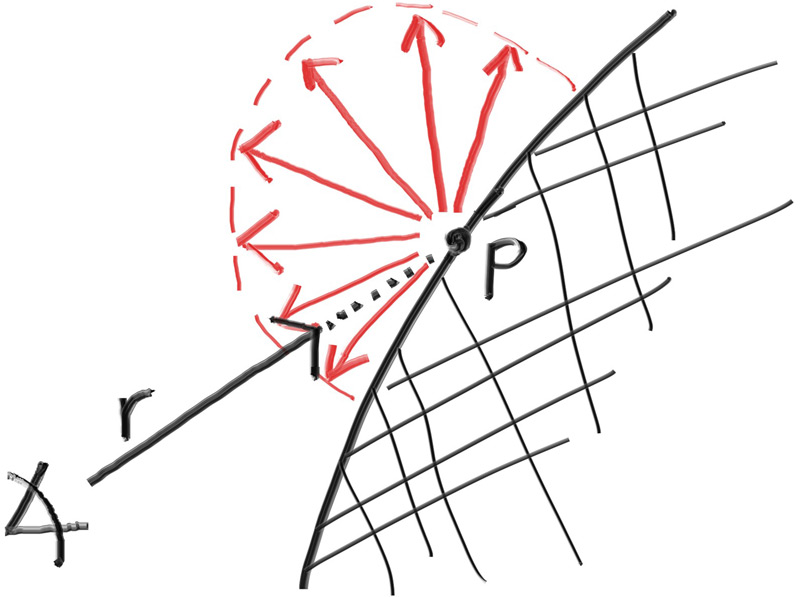

Figure 3: Camera geometry

Now the inevitable tricky part. While our 3D space has the conventions above, this conflicts with our image coordinates, where we want to have the zeroth pixel in the top-left and work our way down to the last pixel at the bottom right. This means that our image coordinate Y-axis is inverted: Y increases going down the image.

As we scan our image, we will start at the upper left pixel (pixel \( 0,0 \)), scan left-to-right across each row, and then scan row-by-row, top-to-bottom. To help navigate the pixel grid, we'll use a vector from the left edge to the right edge (\( \mathbf{V_u} \)), and a vector from the upper edge to the lower edge (\( \mathbf{V_v} \)).

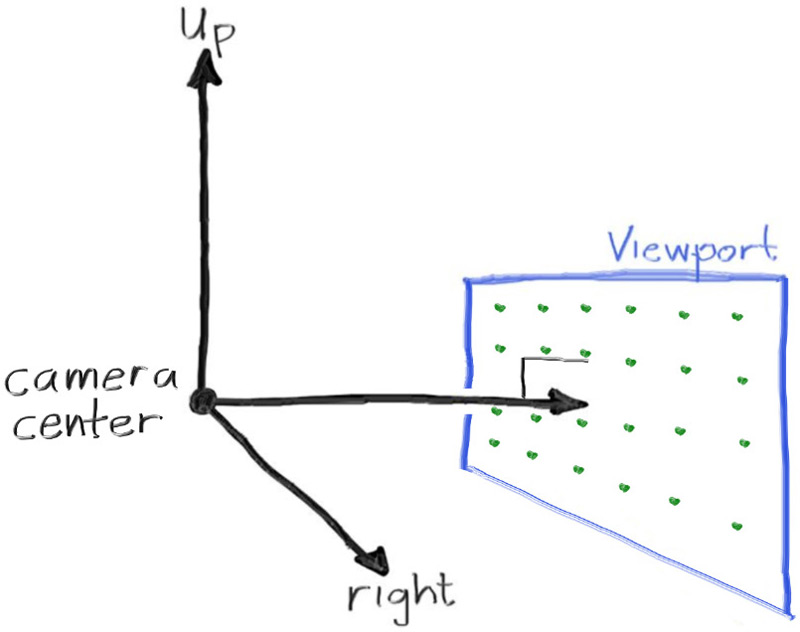

Our pixel grid will be inset from the viewport edges by half the pixel-to-pixel distance. This way, our viewport area is evenly divided into width × height identical regions. Here's what our viewport and pixel grid look like:


Figure 4: Viewport and pixel grid

In this figure, we have the viewport, the pixel grid for a 7×5 resolution image, the viewport upper left corner \( \mathbf{Q} \), the pixel \( \mathbf{P_{0,0}} \) location, the viewport vector \( \mathbf{V_u} \) (viewport_u), the viewport vector \( \mathbf{V_v} \) (viewport_v), and the pixel delta vectors \( \mathbf{\Delta u} \) and \( \mathbf{\Delta v} \).

Drawing from all of this, here's the code that implements the camera. We'll stub in a function ray_color(r: Ray) that returns the color for a given scene ray, which we'll set to always return black for now.

diff --git a/src/main.rs b/src/main.rs

index bb37ee6..8104ae8 100644

--- a/src/main.rs

+++ b/src/main.rs

@@ -1,30 +1,65 @@

-use code::color::{Color, write_color};

+use code::{

+ color::{Color, write_color},

+ ray::Ray,

+ vec3::{Point3, Vec3},

+};

+

+fn ray_color(r: Ray) -> Color {

+ Color::new(0.0, 0.0, 0.0)

+}

fn main() -> Result<(), Box<dyn std::error::Error>> {

// Image

- const IMAGE_WIDTH: u32 = 256;

- const IMAGE_HEIGHT: u32 = 256;

+ const ASPECT_RATIO: f64 = 16.0 / 9.0;

+ const IMAGE_WIDTH: i32 = 400;

+

+ // Calculate the image height, and ensure that it's at least 1.

+ const IMAGE_HEIGHT: i32 = {

+ let image_height = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;

+ if image_height < 1 { 1 } else { image_height }

+ };

+

+ // Camera

+

+ let focal_length = 1.0;

+ let viewport_height = 2.0;

+ let viewport_width = viewport_height * (IMAGE_WIDTH as f64) / (IMAGE_HEIGHT as f64);

+ let camera_center = Point3::new(0.0, 0.0, 0.0);

+

+ // Calculate the vectors across the horizontal and down the vertical viewport edges.

+ let viewport_u = Vec3::new(viewport_width, 0.0, 0.0);

+ let viewport_v = Vec3::new(0.0, -viewport_height, 0.0);

+

+ // Calculate the horizontal and vertical delta vectors from pixel to pixel.

+ let pixel_delta_u = viewport_u / IMAGE_WIDTH as f64;

+ let pixel_delta_v = viewport_v / IMAGE_HEIGHT as f64;

+

+ // Calculate the location of the upper left pixel.

+ let viewport_upper_left =

+ camera_center - Vec3::new(0.0, 0.0, focal_length) - viewport_u / 2.0 - viewport_v / 2.0;

+ let pixel00_loc = viewport_upper_left + 0.5 * (pixel_delta_u + pixel_delta_v);

// Render

env_logger::init();

println!("P3");

println!("{IMAGE_WIDTH} {IMAGE_HEIGHT}");

println!("255");

for j in 0..IMAGE_HEIGHT {

log::info!("Scanlines remaining: {}", IMAGE_HEIGHT - j);

for i in 0..IMAGE_WIDTH {

- let pixel_color = Color::new(

- i as f64 / (IMAGE_WIDTH - 1) as f64,

- j as f64 / (IMAGE_HEIGHT - 1) as f64,

- 0.0,

- );

+ let pixel_center =

+ pixel00_loc + (i as f64) * pixel_delta_u + (j as f64) * pixel_delta_v;

+ let ray_direction = pixel_center - camera_center;

+ let r = Ray::new(camera_center, ray_direction);

+

+ let pixel_color = ray_color(r);

write_color(std::io::stdout(), pixel_color)?;

}

}

log::info!("Done.");

Ok(())

}

Listing 9: [main.rs] Creating scene rays

Notice that in the code above, I didn't make ray_direction a unit vector, because I think not doing that makes for simpler and slightly faster code.
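To check the camera geometry numerically, the sketch below recomputes pixel centers for the 400 by 225 image using plain [f64; 3] arrays instead of Vec3 (pixel_center is a hypothetical helper, not part of the listing). Because the grid is inset by half a pixel, the first and last pixel centers should mirror each other across the viewport center, and every center should lie on the plane \( z = -1 \), one focal length in front of the camera.

```rust
/// Hypothetical re-derivation of the Listing 9 camera setup: returns the
/// center of pixel (i, j). Camera at the origin looking down -z, focal
/// length 1, viewport height 2.
fn pixel_center(i: i32, j: i32, image_width: i32, image_height: i32) -> [f64; 3] {
    let focal_length = 1.0;
    let viewport_height = 2.0;
    let viewport_width = viewport_height * image_width as f64 / image_height as f64;

    // viewport_u points right (+x); viewport_v points down (-y).
    let delta_u = viewport_width / image_width as f64;
    let delta_v = -viewport_height / image_height as f64;

    // Upper-left corner: camera - (0, 0, f) - viewport_u / 2 - viewport_v / 2.
    let upper_left = [-viewport_width / 2.0, viewport_height / 2.0, -focal_length];

    // Pixel (0, 0) sits half a pixel in from that corner in both directions.
    let x0 = upper_left[0] + 0.5 * delta_u;
    let y0 = upper_left[1] + 0.5 * delta_v;

    [x0 + i as f64 * delta_u, y0 + j as f64 * delta_v, upper_left[2]]
}

fn main() {
    let (w, h) = (400, 225);
    println!("pixel (0, 0):     {:?}", pixel_center(0, 0, w, h));
    println!("pixel (399, 224): {:?}", pixel_center(399, 224, w, h));
}
```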

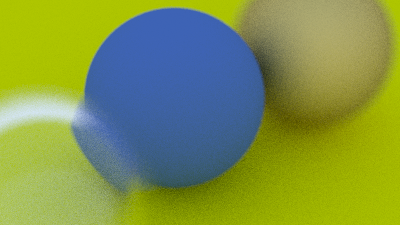

Now we'll fill in the ray_color(ray) function to implement a simple gradient. This function will linearly blend white and blue depending on the height of the \( y \) coordinate after scaling the ray direction to unit length (so \( -1.0 < y < 1.0 \)). Because we're looking at the \( y \) height after normalizing the vector, you'll notice a horizontal gradient to the color in addition to the vertical gradient.

I'll use a standard graphics trick to linearly scale \( 0.0 \leq a \leq 1.0 \). When \( a = 1.0 \), I want blue. When \( a = 0.0 \), I want white. In between, I want a blend. This forms a “linear blend”, or “linear interpolation”. This is commonly referred to as a lerp between two values. A lerp is always of the form

\[ blendedValue = (1 - a) \cdot startValue + a \cdot endValue, \]

with \( a \) going from zero to one.
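Here is the lerp in isolation, as a sketch with plain arrays; lerp and sky are hypothetical helper names, since the listing below inlines the same arithmetic in ray_color. It also shows the remapping of the unit direction's \( y \) from \( [-1, 1] \) to \( a \in [0, 1] \): straight down gives pure white, straight up gives pure blue, and the horizon gets the halfway blend.

```rust
/// Linear interpolation between two RGB triples:
/// (1 - a) * start + a * end, for a in [0, 1].
fn lerp(start: [f64; 3], end: [f64; 3], a: f64) -> [f64; 3] {
    [
        (1.0 - a) * start[0] + a * end[0],
        (1.0 - a) * start[1] + a * end[1],
        (1.0 - a) * start[2] + a * end[2],
    ]
}

/// Background color: remap unit_y from [-1, 1] to a in [0, 1], then blend
/// white (a = 0) into light blue (a = 1).
fn sky(unit_y: f64) -> [f64; 3] {
    let a = 0.5 * (unit_y + 1.0);
    lerp([1.0, 1.0, 1.0], [0.5, 0.7, 1.0], a)
}

fn main() {
    for y in [-1.0, 0.0, 1.0] {
        println!("y = {y:>4}: {:?}", sky(y));
    }
}
```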

Putting all this together, here's what we get:

diff --git a/src/main.rs b/src/main.rs

index 8104ae8..f31dc16 100644

--- a/src/main.rs

+++ b/src/main.rs

@@ -1,65 +1,67 @@

use code::{

color::{Color, write_color},

ray::Ray,

- vec3::{Point3, Vec3},

+ vec3::{Point3, Vec3, unit_vector},

};

fn ray_color(r: Ray) -> Color {

- Color::new(0.0, 0.0, 0.0)

+ let unit_direction = unit_vector(r.direction());

+ let a = 0.5 * (unit_direction.y() + 1.0);

+ (1.0 - a) * Color::new(1.0, 1.0, 1.0) + a * Color::new(0.5, 0.7, 1.0)

}

fn main() -> Result<(), Box<dyn std::error::Error>> {

// Image

const ASPECT_RATIO: f64 = 16.0 / 9.0;

const IMAGE_WIDTH: i32 = 400;

// Calculate the image height, and ensure that it's at least 1.

const IMAGE_HEIGHT: i32 = {

let image_height = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;

if image_height < 1 { 1 } else { image_height }

};

// Camera

let focal_length = 1.0;

let viewport_height = 2.0;

let viewport_width = viewport_height * (IMAGE_WIDTH as f64) / (IMAGE_HEIGHT as f64);

let camera_center = Point3::new(0.0, 0.0, 0.0);

// Calculate the vectors across the horizontal and down the vertical viewport edges.

let viewport_u = Vec3::new(viewport_width, 0.0, 0.0);

let viewport_v = Vec3::new(0.0, -viewport_height, 0.0);

// Calculate the horizontal and vertical delta vectors from pixel to pixel.

let pixel_delta_u = viewport_u / IMAGE_WIDTH as f64;

let pixel_delta_v = viewport_v / IMAGE_HEIGHT as f64;

// Calculate the location of the upper left pixel.

let viewport_upper_left =

camera_center - Vec3::new(0.0, 0.0, focal_length) - viewport_u / 2.0 - viewport_v / 2.0;

let pixel00_loc = viewport_upper_left + 0.5 * (pixel_delta_u + pixel_delta_v);

// Render

env_logger::init();

println!("P3");

println!("{IMAGE_WIDTH} {IMAGE_HEIGHT}");

println!("255");

for j in 0..IMAGE_HEIGHT {

log::info!("Scanlines remaining: {}", IMAGE_HEIGHT - j);

for i in 0..IMAGE_WIDTH {

let pixel_center =

pixel00_loc + (i as f64) * pixel_delta_u + (j as f64) * pixel_delta_v;

let ray_direction = pixel_center - camera_center;

let r = Ray::new(camera_center, ray_direction);

let pixel_color = ray_color(r);

write_color(std::io::stdout(), pixel_color)?;

}

}

log::info!("Done.");

Ok(())

}

Listing 10: [main.rs] Rendering a blue-to-white gradient

In our case this produces:

Image 2: A blue-to-white gradient depending on ray Y coordinate

Adding a Sphere

Let’s add a single object to our ray tracer. People often use spheres in ray tracers because calculating whether a ray hits a sphere is relatively simple.

Ray-Sphere Intersection

The equation for a sphere of radius \( r \) that is centered at the origin is an important mathematical equation:

\[ x^2 + y^2 + z^2 = r^2 \]

You can also think of this as saying that if a given point \( (x, y, z) \) is on the surface of the sphere, then \( x^2 + y^2 + z^2 = r^2 \). If a given point \( (x, y, z) \) is inside the sphere, then \( x^2 + y^2 + z^2 < r^2 \), and if a given point \( (x, y, z) \) is outside the sphere, then \( x^2 + y^2 + z^2 > r^2 \).
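These three cases translate directly into a comparison. A minimal sketch, assuming a sphere centered at the origin and plain [f64; 3] points (classify is a hypothetical helper, not part of the book's code), compares \( x^2 + y^2 + z^2 \) with \( r^2 \) and returns a std::cmp::Ordering: Less for inside, Equal for on the surface, Greater for outside.

```rust
use std::cmp::Ordering;

/// Hypothetical helper: where does point p lie relative to a sphere of
/// radius r centered at the origin? Compares x^2 + y^2 + z^2 with r^2.
fn classify(p: [f64; 3], r: f64) -> Ordering {
    let d2 = p[0] * p[0] + p[1] * p[1] + p[2] * p[2];
    // Exact Equal is fragile in floating point; real code would typically
    // use a tolerance. partial_cmp returns None only for NaN inputs.
    d2.partial_cmp(&(r * r)).expect("coordinates must not be NaN")
}

fn main() {
    let radius = 0.5;
    for p in [[0.0, 0.1, 0.0], [0.0, 0.0, 0.5], [1.0, 0.0, 0.0]] {
        println!("{p:?}: {:?}", classify(p, radius));
    }
}
```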

If we want to allow the sphere center to be at an arbitrary point \( (C_x, C_y, C_z) \), then the equation becomes a lot less nice:

\[ (C_x - x)^2 + (C_y - y)^2 + (C_z - z)^2 = r^2 \]

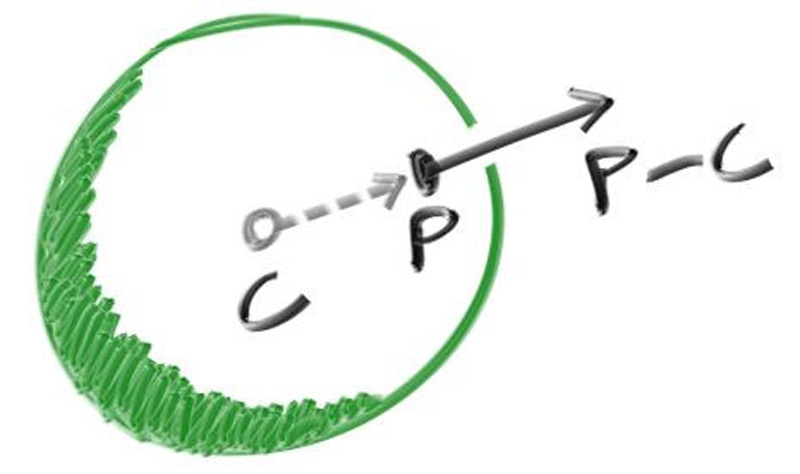

In graphics, you almost always want your formulas to be in terms of vectors so that all the \( x/y/z \) stuff can be simply represented using a vec3 class. You might note that the vector from point \( \mathbf{P} = (x, y, z) \) to center \( \mathbf{C} = (C_x, C_y, C_z) \) is \( (\mathbf{C} - \mathbf{P}) \).

If we use the definition of the dot product:

\[ (\mathbf{C} - \mathbf{P}) \cdot (\mathbf{C} - \mathbf{P}) = (C_x - x)^2 + (C_y - y)^2 + (C_z - z)^2 \]

Then we can rewrite the equation of the sphere in vector form as:

\[ (\mathbf{C} - \mathbf{P}) \cdot (\mathbf{C} - \mathbf{P}) = r^2 \]
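The vector form maps directly onto code. The sketch below is not part of the book's code: it uses a minimal stand-in Vec3 (an assumption, with only subtraction and a dot product) and a hypothetical helper, sphere_side, that evaluates \( (\mathbf{C} - \mathbf{P}) \cdot (\mathbf{C} - \mathbf{P}) - r^2 \). Its sign tells you whether a point is inside, on, or outside the sphere.

```rust
// Minimal stand-in for the book's Vec3 (assumption: only what this sketch needs).
#[derive(Clone, Copy, Debug)]
struct Vec3 {
    x: f64,
    y: f64,
    z: f64,
}

impl std::ops::Sub for Vec3 {
    type Output = Vec3;
    fn sub(self, o: Vec3) -> Vec3 {
        Vec3 { x: self.x - o.x, y: self.y - o.y, z: self.z - o.z }
    }
}

fn dot(u: Vec3, v: Vec3) -> f64 {
    u.x * v.x + u.y * v.y + u.z * v.z
}

/// Hypothetical helper: evaluates (C - P)·(C - P) - r².
/// Negative means P is inside, zero means on the surface, positive means outside.
fn sphere_side(center: Vec3, radius: f64, p: Vec3) -> f64 {
    let cp = center - p;
    dot(cp, cp) - radius * radius
}

fn main() {
    let center = Vec3 { x: 0.0, y: 0.0, z: -1.0 };
    let on_surface = Vec3 { x: 0.0, y: 0.5, z: -1.0 };
    let origin = Vec3 { x: 0.0, y: 0.0, z: 0.0 };
    println!("{}", sphere_side(center, 0.5, on_surface)); // 0
    println!("{}", sphere_side(center, 0.5, origin)); // 0.75 (outside)
}
```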

We can read this as “any point \( \mathbf{P} \) that satisfies this equation is on the sphere”. We want to know if our ray \( \mathbf{P}(t) = \mathbf{Q} + t \mathbf{d} \) ever hits the sphere anywhere. If it does hit the sphere, there is some \( t \) for which \( \mathbf{P}(t) \) satisfies the sphere equation. So we are looking for any \( t \) where this is true:

\[ (\mathbf{C} - \mathbf{P}(t)) \cdot (\mathbf{C} - \mathbf{P}(t)) = r^2 \]

which can be found by replacing \( \mathbf{P}(t) \) with its expanded form:

\[ (\mathbf{C} - (\mathbf{Q} + t \mathbf{d})) \cdot (\mathbf{C} - (\mathbf{Q} + t \mathbf{d})) = r^2 \]

We have three vectors on the left dotted by three vectors on the right. If we expanded the full dot product we would get nine terms. You can definitely go through and write everything out, but we don't need to work that hard. Remember that we want to solve for \( t \), so we'll separate the terms based on whether there is a \( t \) or not:

\[ (-t \mathbf{d} + (\mathbf{C} - \mathbf{Q})) \cdot (-t \mathbf{d} + (\mathbf{C} - \mathbf{Q})) = r^2 \]

And now we follow the rules of vector algebra to distribute the dot product:

\[ t^2 \mathbf{d} \cdot \mathbf{d} - 2 t \mathbf{d} \cdot (\mathbf{C} - \mathbf{Q}) + (\mathbf{C} - \mathbf{Q}) \cdot (\mathbf{C} - \mathbf{Q}) = r^2 \]

Move the square of the radius over to the left hand side:

\[ t^2 \mathbf{d} \cdot \mathbf{d} - 2 t \mathbf{d} \cdot (\mathbf{C} - \mathbf{Q}) + (\mathbf{C} - \mathbf{Q}) \cdot (\mathbf{C} - \mathbf{Q}) - r^2 = 0 \]

It's hard to make out what exactly this equation is, but the vectors and \( r \) in that equation are all constant and known. Furthermore, the only vectors that we have are reduced to scalars by dot products. The only unknown is \( t \), and we have a \( t^2 \), which means that this equation is quadratic. You can solve a quadratic equation \( ax^2 + bx + c = 0 \) by using the quadratic formula:

\[ \frac{-b \pm \sqrt{b^2 - 4ac}}{2a} \]

So solving for \( t \) in the ray-sphere intersection equation gives us these values for \( a \), \( b \), and \( c \):

\[ a = \mathbf{d} \cdot \mathbf{d} \] \[ b = -2 \mathbf{d} \cdot (\mathbf{C} - \mathbf{Q}) \] \[ c = (\mathbf{C} - \mathbf{Q}) \cdot (\mathbf{C} - \mathbf{Q}) - r^2 \]
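As a worked example (assumed values, chosen to match the scene used later in this chapter), take a ray from \( \mathbf{Q} = (0, 0, 0) \) along \( \mathbf{d} = (0, 0, -1) \) and a sphere with \( \mathbf{C} = (0, 0, -1) \), \( r = 0.5 \). Then \( \mathbf{C} - \mathbf{Q} = (0, 0, -1) \), so \( a = 1 \), \( b = -2 \), and \( c = 1 - 0.25 = 0.75 \). A small sketch using plain [f64; 3] arrays instead of the book's Vec3 (the helper name is hypothetical) reproduces these numbers:

```rust
fn dot(u: [f64; 3], v: [f64; 3]) -> f64 {
    u[0] * v[0] + u[1] * v[1] + u[2] * v[2]
}

/// Quadratic coefficients (a, b, c) for the ray Q + t·d against the sphere (C, r),
/// following the definitions above.
fn sphere_coefficients(q: [f64; 3], d: [f64; 3], center: [f64; 3], r: f64) -> (f64, f64, f64) {
    let cq = [center[0] - q[0], center[1] - q[1], center[2] - q[2]]; // C - Q
    let a = dot(d, d);
    let b = -2.0 * dot(d, cq);
    let c = dot(cq, cq) - r * r;
    (a, b, c)
}

fn main() {
    let (a, b, c) = sphere_coefficients([0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 0.0, -1.0], 0.5);
    println!("a = {a}, b = {b}, c = {c}"); // a = 1, b = -2, c = 0.75
}
```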

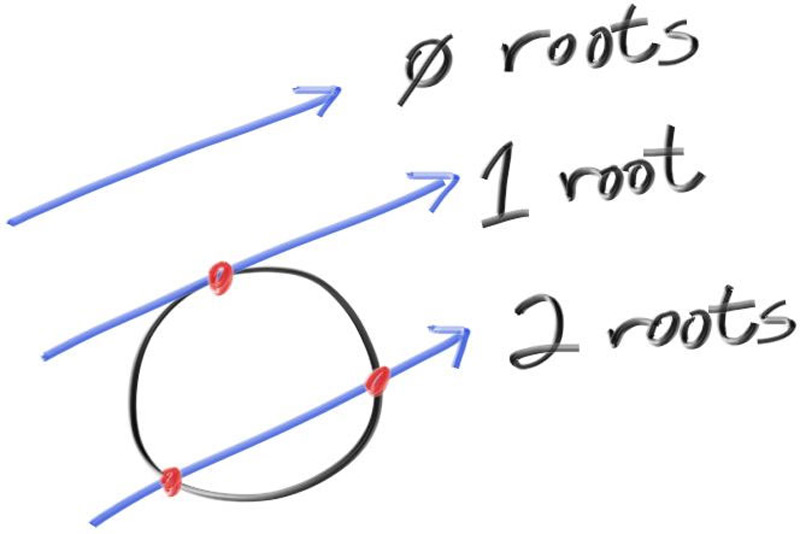

Using all of the above you can solve for \( t \), but the quantity under the square root, the discriminant \( b^2 - 4ac \), can be positive (meaning two real solutions), negative (meaning no real solutions), or zero (meaning one real solution). In graphics, the algebra almost always relates very directly to the geometry. What we have is:

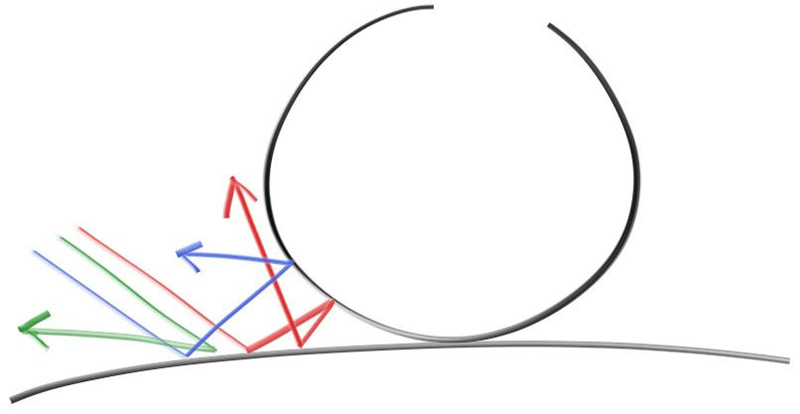

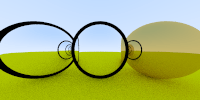

Figure 5: Ray-sphere intersection results

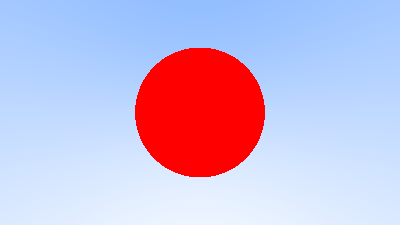

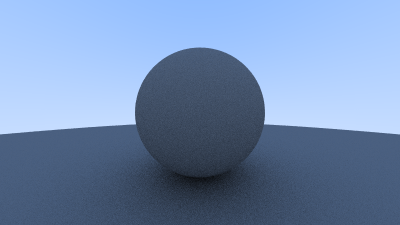

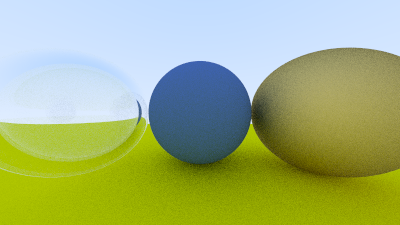
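The three cases in Figure 5 can be read straight off the sign of the discriminant. The sketch below is a hypothetical helper, not code from the book. The exact comparison with zero for the tangent case is mostly illustrative, since floating-point rounding makes an exact zero rare in practice.

```rust
/// Solves a·t² + b·t + c = 0 (assuming a > 0) and returns the real roots, smallest first.
/// Discriminant < 0: the ray misses; == 0: it grazes the sphere; > 0: it enters and exits.
fn solve_quadratic(a: f64, b: f64, c: f64) -> Vec<f64> {
    let discriminant = b * b - 4.0 * a * c;
    if discriminant < 0.0 {
        Vec::new()
    } else if discriminant == 0.0 {
        vec![-b / (2.0 * a)]
    } else {
        let s = discriminant.sqrt();
        vec![(-b - s) / (2.0 * a), (-b + s) / (2.0 * a)]
    }
}

fn main() {
    println!("{:?}", solve_quadratic(1.0, -2.0, 0.75)); // [0.5, 1.5]: two hits
    println!("{:?}", solve_quadratic(1.0, -2.0, 1.0)); // [1.0]: tangent
    println!("{:?}", solve_quadratic(1.0, 0.0, 0.75)); // []: miss
}
```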

Creating Our First Raytraced Image

If we take that math and hard-code it into our program, we can test our code by placing a small sphere at \( −1 \) on the z-axis and then coloring red any pixel that intersects it.

```diff
diff --git a/src/main.rs b/src/main.rs
index f31dc16..e3d9091 100644
--- a/src/main.rs
+++ b/src/main.rs
@@ -1,67 +1,81 @@
 use code::{
     color::{Color, write_color},
     ray::Ray,
-    vec3::{Point3, Vec3, unit_vector},
+    vec3::{Point3, Vec3, dot, unit_vector},
 };

+fn hit_sphere(center: Point3, radius: f64, r: Ray) -> bool {
+    let oc = center - r.origin();
+    let a = dot(r.direction(), r.direction());
+    let b = -2.0 * dot(r.direction(), oc);
+    let c = dot(oc, oc) - radius * radius;
+    let discriminant = b * b - 4.0 * a * c;
+
+    discriminant >= 0.0
+}
+
 fn ray_color(r: Ray) -> Color {
+    if hit_sphere(Point3::new(0.0, 0.0, -1.0), 0.5, r) {
+        return Color::new(1.0, 0.0, 0.0);
+    }
+
     let unit_direction = unit_vector(r.direction());
     let a = 0.5 * (unit_direction.y() + 1.0);
     (1.0 - a) * Color::new(1.0, 1.0, 1.0) + a * Color::new(0.5, 0.7, 1.0)
 }

 fn main() -> Result<(), Box<dyn std::error::Error>> {
     // Image
     const ASPECT_RATIO: f64 = 16.0 / 9.0;
     const IMAGE_WIDTH: i32 = 400;

     // Calculate the image height, and ensure that it's at least 1.
     const IMAGE_HEIGHT: i32 = {
         let image_height = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;
         if image_height < 1 { 1 } else { image_height }
     };

     // Camera
     let focal_length = 1.0;
     let viewport_height = 2.0;
     let viewport_width = viewport_height * (IMAGE_WIDTH as f64) / (IMAGE_HEIGHT as f64);
     let camera_center = Point3::new(0.0, 0.0, 0.0);

     // Calculate the vectors across the horizontal and down the vertical viewport edges.
     let viewport_u = Vec3::new(viewport_width, 0.0, 0.0);
     let viewport_v = Vec3::new(0.0, -viewport_height, 0.0);

     // Calculate the horizontal and vertical delta vectors from pixel to pixel.
     let pixel_delta_u = viewport_u / IMAGE_WIDTH as f64;
     let pixel_delta_v = viewport_v / IMAGE_HEIGHT as f64;

     // Calculate the location of the upper left pixel.
     let viewport_upper_left =
         camera_center - Vec3::new(0.0, 0.0, focal_length) - viewport_u / 2.0 - viewport_v / 2.0;
     let pixel00_loc = viewport_upper_left + 0.5 * (pixel_delta_u + pixel_delta_v);

     // Render
     env_logger::init();

     println!("P3");
     println!("{IMAGE_WIDTH} {IMAGE_HEIGHT}");
     println!("255");

     for j in 0..IMAGE_HEIGHT {
         log::info!("Scanlines remaining: {}", IMAGE_HEIGHT - j);
         for i in 0..IMAGE_WIDTH {
             let pixel_center =
                 pixel00_loc + (i as f64) * pixel_delta_u + (j as f64) * pixel_delta_v;
             let ray_direction = pixel_center - camera_center;
             let r = Ray::new(camera_center, ray_direction);

             let pixel_color = ray_color(r);
             write_color(std::io::stdout(), pixel_color)?;
         }
     }

     log::info!("Done.");
     Ok(())
 }
```

Listing 11: [main.rs] Rendering a red sphere

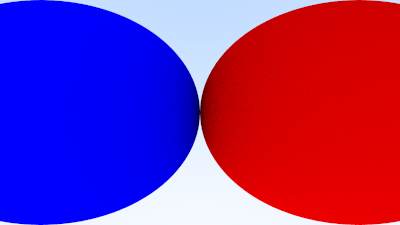

What we get is this:

Image 3: A simple red sphere

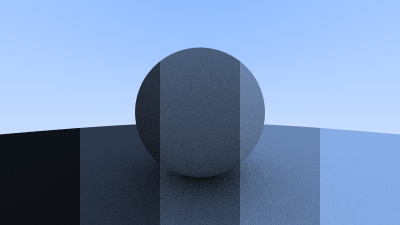

Shading with Surface Normals

First, let’s get ourselves a surface normal so we can shade. This is a vector that is perpendicular to the surface at the point of intersection.

We have a key design decision to make for normal vectors in our code: whether normal vectors will have an arbitrary length, or will be normalized to unit length.

It is tempting to skip the expensive square root operation involved in normalizing the vector, in case it's not needed. In practice, however, there are three important observations. First, if a unit-length normal vector is ever required, then you might as well do it up front once, instead of over and over again “just in case” for every location where unit-length is required. Second, we do require unit-length normal vectors in several places. Third, if you require normal vectors to be unit length, then you can often efficiently generate that vector with an understanding of the specific geometry class, in its constructor, or in the hit() function. For example, sphere normals can be made unit length simply by dividing by the sphere radius, avoiding the square root entirely.

Given all of this, we will adopt the policy that all normal vectors will be of unit length.
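The radius trick mentioned above works because every point on the sphere is exactly \( r \) away from the center, so \( (\mathbf{P} - \mathbf{C}) / r \) already has unit length. A small sketch with plain arrays (the helper names are hypothetical, not the book's API) checks this numerically:

```rust
fn length(v: [f64; 3]) -> f64 {
    (v[0] * v[0] + v[1] * v[1] + v[2] * v[2]).sqrt()
}

/// Outward normal at a point `p` that is assumed to lie on the sphere (center, radius).
/// Dividing by the radius normalizes it without calling sqrt.
fn sphere_normal(center: [f64; 3], radius: f64, p: [f64; 3]) -> [f64; 3] {
    [
        (p[0] - center[0]) / radius,
        (p[1] - center[1]) / radius,
        (p[2] - center[2]) / radius,
    ]
}

fn main() {
    // (0.3, 0.4, -1.0) is 0.5 away from (0, 0, -1), so it lies on a radius-0.5 sphere there.
    let n = sphere_normal([0.0, 0.0, -1.0], 0.5, [0.3, 0.4, -1.0]);
    println!("{:?}, length = {}", n, length(n));
}
```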

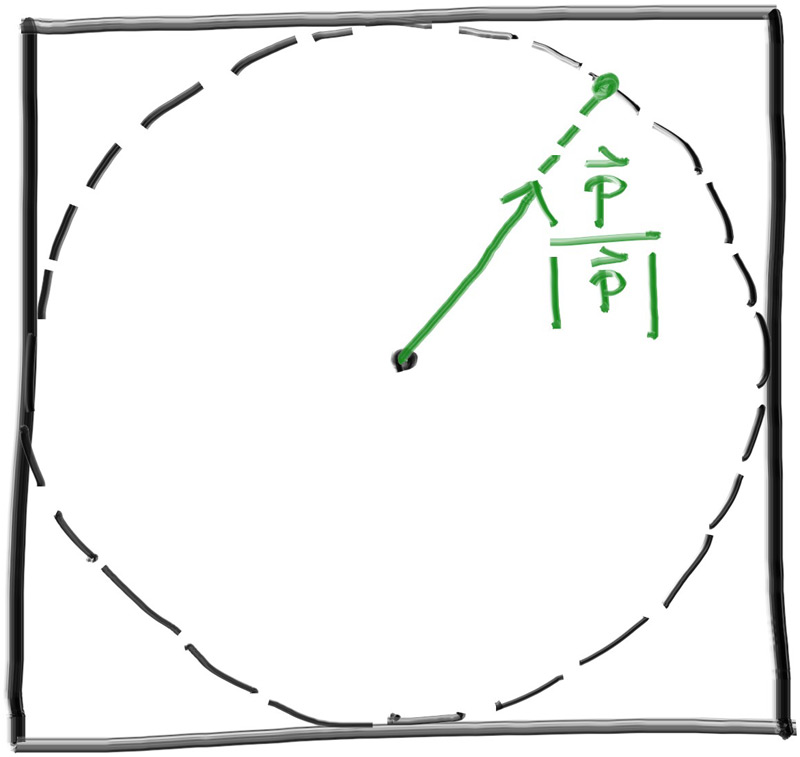

For a sphere, the outward normal is in the direction of the hit point minus the center:

Figure 6: Sphere surface-normal geometry

On the earth, this means that the vector from the earth’s center to you points straight up. Let’s throw that into the code now, and shade it. We don’t have any lights or anything yet, so let’s just visualize the normals with a color map. A common trick used for visualizing normals (because it’s easy and somewhat intuitive to assume \( \mathbf{n} \) is a unit length vector — so each component is between \( −1 \) and \( 1 \)) is to map each component to the interval from \( 0 \) to \( 1 \), and then map \( (x, y, z) \) to \( (red, green, blue) \). For the normal, we need the hit point, not just whether we hit or not (which is all we're calculating at the moment). We only have one sphere in the scene, and it's directly in front of the camera, so we won't worry about negative values of \( t \) yet. We'll just assume the closest hit point (smallest \( t \)) is the one that we want. These changes in the code let us compute and visualize \( \mathbf{n} \):

```diff
diff --git a/src/main.rs b/src/main.rs
index e3d9091..405ca4b 100644
--- a/src/main.rs
+++ b/src/main.rs
@@ -1,81 +1,82 @@
 use code::{
     color::{Color, write_color},
     ray::Ray,
     vec3::{Point3, Vec3, dot, unit_vector},
 };

-fn hit_sphere(center: Point3, radius: f64, r: Ray) -> bool {
+fn hit_sphere(center: Point3, radius: f64, r: Ray) -> Option<f64> {
     let oc = center - r.origin();
     let a = dot(r.direction(), r.direction());
     let b = -2.0 * dot(r.direction(), oc);
     let c = dot(oc, oc) - radius * radius;
     let discriminant = b * b - 4.0 * a * c;

-    discriminant >= 0.0
+    (discriminant >= 0.0).then(|| (-b - f64::sqrt(discriminant)) / (2.0 * a))
 }

 fn ray_color(r: Ray) -> Color {
-    if hit_sphere(Point3::new(0.0, 0.0, -1.0), 0.5, r) {
-        return Color::new(1.0, 0.0, 0.0);
+    if let Some(t) = hit_sphere(Point3::new(0.0, 0.0, -1.0), 0.5, r) {
+        let n = unit_vector(r.at(t) - Vec3::new(0.0, 0.0, -1.0));
+        return 0.5 * Color::new(n.x() + 1.0, n.y() + 1.0, n.z() + 1.0);
     }

     let unit_direction = unit_vector(r.direction());
     let a = 0.5 * (unit_direction.y() + 1.0);
     (1.0 - a) * Color::new(1.0, 1.0, 1.0) + a * Color::new(0.5, 0.7, 1.0)
 }

 fn main() -> Result<(), Box<dyn std::error::Error>> {
     // Image
     const ASPECT_RATIO: f64 = 16.0 / 9.0;
     const IMAGE_WIDTH: i32 = 400;

     // Calculate the image height, and ensure that it's at least 1.
     const IMAGE_HEIGHT: i32 = {
         let image_height = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;
         if image_height < 1 { 1 } else { image_height }
     };

     // Camera
     let focal_length = 1.0;
     let viewport_height = 2.0;
     let viewport_width = viewport_height * (IMAGE_WIDTH as f64) / (IMAGE_HEIGHT as f64);
     let camera_center = Point3::new(0.0, 0.0, 0.0);

     // Calculate the vectors across the horizontal and down the vertical viewport edges.
     let viewport_u = Vec3::new(viewport_width, 0.0, 0.0);
     let viewport_v = Vec3::new(0.0, -viewport_height, 0.0);

     // Calculate the horizontal and vertical delta vectors from pixel to pixel.
     let pixel_delta_u = viewport_u / IMAGE_WIDTH as f64;
     let pixel_delta_v = viewport_v / IMAGE_HEIGHT as f64;

     // Calculate the location of the upper left pixel.
     let viewport_upper_left =
         camera_center - Vec3::new(0.0, 0.0, focal_length) - viewport_u / 2.0 - viewport_v / 2.0;
     let pixel00_loc = viewport_upper_left + 0.5 * (pixel_delta_u + pixel_delta_v);

     // Render
     env_logger::init();

     println!("P3");
     println!("{IMAGE_WIDTH} {IMAGE_HEIGHT}");
     println!("255");

     for j in 0..IMAGE_HEIGHT {
         log::info!("Scanlines remaining: {}", IMAGE_HEIGHT - j);
         for i in 0..IMAGE_WIDTH {
             let pixel_center =
                 pixel00_loc + (i as f64) * pixel_delta_u + (j as f64) * pixel_delta_v;
             let ray_direction = pixel_center - camera_center;
             let r = Ray::new(camera_center, ray_direction);

             let pixel_color = ray_color(r);
             write_color(std::io::stdout(), pixel_color)?;
         }
     }

     log::info!("Done.");
     Ok(())
 }
```

Listing 12: [main.rs] Rendering surface normals on a sphere

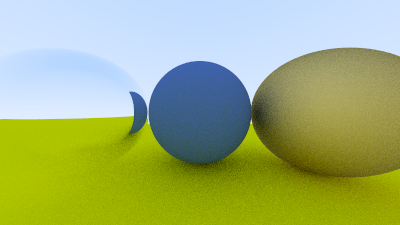

And that yields this picture:

Image 4: A sphere colored according to its normal vectors
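The color map used in ray_color above, \( 0.5\,(\mathbf{n} + 1) \) per component, can be checked on its own. This is a standalone sketch with a hypothetical helper name and plain arrays in place of Color:

```rust
/// Maps each component of a unit normal from [-1, 1] to [0, 1],
/// the same mapping as 0.5 * Color::new(n.x() + 1.0, n.y() + 1.0, n.z() + 1.0).
fn normal_to_rgb(n: [f64; 3]) -> [f64; 3] {
    [0.5 * (n[0] + 1.0), 0.5 * (n[1] + 1.0), 0.5 * (n[2] + 1.0)]
}

fn main() {
    // A normal pointing straight back at the camera (+z), at the front of the sphere.
    println!("{:?}", normal_to_rgb([0.0, 0.0, 1.0])); // [0.5, 0.5, 1.0]
    // A normal pointing up (+y), at the top of the sphere's silhouette.
    println!("{:?}", normal_to_rgb([0.0, 1.0, 0.0])); // [0.5, 1.0, 0.5]
}
```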

Simplifying the Ray-Sphere Intersection Code

Let’s revisit the ray-sphere function:

```rust
use code::{
    color::{Color, write_color},
    ray::Ray,
    vec3::{Point3, Vec3, dot, unit_vector},
};

fn hit_sphere(center: Point3, radius: f64, r: Ray) -> Option<f64> {
    let oc = center - r.origin();
    let a = dot(r.direction(), r.direction());
    let b = -2.0 * dot(r.direction(), oc);
    let c = dot(oc, oc) - radius * radius;
    let discriminant = b * b - 4.0 * a * c;

    (discriminant >= 0.0).then(|| (-b - f64::sqrt(discriminant)) / (2.0 * a))
}

fn ray_color(r: Ray) -> Color {
    if let Some(t) = hit_sphere(Point3::new(0.0, 0.0, -1.0), 0.5, r) {
        let n = unit_vector(r.at(t) - Vec3::new(0.0, 0.0, -1.0));
        return 0.5 * Color::new(n.x() + 1.0, n.y() + 1.0, n.z() + 1.0);
    }

    let unit_direction = unit_vector(r.direction());
    let a = 0.5 * (unit_direction.y() + 1.0);
    (1.0 - a) * Color::new(1.0, 1.0, 1.0) + a * Color::new(0.5, 0.7, 1.0)
}

fn main() -> Result<(), Box<dyn std::error::Error>> {
    // Image
    const ASPECT_RATIO: f64 = 16.0 / 9.0;
    const IMAGE_WIDTH: i32 = 400;

    // Calculate the image height, and ensure that it's at least 1.
    const IMAGE_HEIGHT: i32 = {
        let image_height = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;
        if image_height < 1 { 1 } else { image_height }
    };

    // Camera
    let focal_length = 1.0;
    let viewport_height = 2.0;
    let viewport_width = viewport_height * (IMAGE_WIDTH as f64) / (IMAGE_HEIGHT as f64);
    let camera_center = Point3::new(0.0, 0.0, 0.0);

    // Calculate the vectors across the horizontal and down the vertical viewport edges.
    let viewport_u = Vec3::new(viewport_width, 0.0, 0.0);
    let viewport_v = Vec3::new(0.0, -viewport_height, 0.0);

    // Calculate the horizontal and vertical delta vectors from pixel to pixel.
    let pixel_delta_u = viewport_u / IMAGE_WIDTH as f64;
    let pixel_delta_v = viewport_v / IMAGE_HEIGHT as f64;

    // Calculate the location of the upper left pixel.
    let viewport_upper_left =
        camera_center - Vec3::new(0.0, 0.0, focal_length) - viewport_u / 2.0 - viewport_v / 2.0;
    let pixel00_loc = viewport_upper_left + 0.5 * (pixel_delta_u + pixel_delta_v);

    // Render
    env_logger::init();

    println!("P3");
    println!("{IMAGE_WIDTH} {IMAGE_HEIGHT}");
    println!("255");

    for j in 0..IMAGE_HEIGHT {
        log::info!("Scanlines remaining: {}", IMAGE_HEIGHT - j);
        for i in 0..IMAGE_WIDTH {
            let pixel_center =
                pixel00_loc + (i as f64) * pixel_delta_u + (j as f64) * pixel_delta_v;
            let ray_direction = pixel_center - camera_center;
            let r = Ray::new(camera_center, ray_direction);

            let pixel_color = ray_color(r);
            write_color(std::io::stdout(), pixel_color)?;
        }
    }

    log::info!("Done.");
    Ok(())
}
```

Listing 13: [main.rs] Ray-sphere intersection code (before)

First, recall that a vector dotted with itself is equal to the squared length of that vector.

Second, notice how the equation for \( b \) has a factor of negative two in it. Consider what happens to the quadratic equation if \( b = -2h \):

\[ \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}\] \[= \frac{-(-2h) \pm \sqrt{(-2h)^2 - 4ac}}{2a}\] \[= \frac{2h \pm 2 \sqrt{h^2 - ac}}{2a}\] \[= \frac{h \pm \sqrt{h^2 - ac}}{a}\]

This simplifies nicely, so we'll use it. So solving for \( h \):

\[b = -2 \mathbf{d} \cdot (\mathbf{C} - \mathbf{Q}) \] \[b = -2h \] \[h = \frac{b}{-2} = \mathbf{d} \cdot (\mathbf{C} - \mathbf{Q}) \]

Using these observations, we can now simplify the sphere-intersection code to this:

```diff
diff --git a/src/main.rs b/src/main.rs
index 405ca4b..1f26e3d 100644
--- a/src/main.rs
+++ b/src/main.rs
@@ -1,82 +1,82 @@
 use code::{
     color::{Color, write_color},
     ray::Ray,
     vec3::{Point3, Vec3, dot, unit_vector},
 };

 fn hit_sphere(center: Point3, radius: f64, r: Ray) -> Option<f64> {
     let oc = center - r.origin();
-    let a = dot(r.direction(), r.direction());
-    let b = -2.0 * dot(r.direction(), oc);
-    let c = dot(oc, oc) - radius * radius;
-    let discriminant = b * b - 4.0 * a * c;
+    let a = r.direction().length_squared();
+    let h = dot(r.direction(), oc);
+    let c = oc.length_squared() - radius * radius;
+    let discriminant = h * h - a * c;

-    (discriminant >= 0.0).then(|| (-b - f64::sqrt(discriminant)) / (2.0 * a))
+    (discriminant >= 0.0).then(|| (h - f64::sqrt(discriminant)) / a)
 }

 fn ray_color(r: Ray) -> Color {
     if let Some(t) = hit_sphere(Point3::new(0.0, 0.0, -1.0), 0.5, r) {
         let n = unit_vector(r.at(t) - Vec3::new(0.0, 0.0, -1.0));
         return 0.5 * Color::new(n.x() + 1.0, n.y() + 1.0, n.z() + 1.0);
     }

     let unit_direction = unit_vector(r.direction());
     let a = 0.5 * (unit_direction.y() + 1.0);
     (1.0 - a) * Color::new(1.0, 1.0, 1.0) + a * Color::new(0.5, 0.7, 1.0)
 }

 fn main() -> Result<(), Box<dyn std::error::Error>> {
     // Image
     const ASPECT_RATIO: f64 = 16.0 / 9.0;
     const IMAGE_WIDTH: i32 = 400;

     // Calculate the image height, and ensure that it's at least 1.
     const IMAGE_HEIGHT: i32 = {
         let image_height = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;
         if image_height < 1 { 1 } else { image_height }
     };

     // Camera
     let focal_length = 1.0;
     let viewport_height = 2.0;
     let viewport_width = viewport_height * (IMAGE_WIDTH as f64) / (IMAGE_HEIGHT as f64);
     let camera_center = Point3::new(0.0, 0.0, 0.0);

     // Calculate the vectors across the horizontal and down the vertical viewport edges.
     let viewport_u = Vec3::new(viewport_width, 0.0, 0.0);
     let viewport_v = Vec3::new(0.0, -viewport_height, 0.0);

     // Calculate the horizontal and vertical delta vectors from pixel to pixel.
     let pixel_delta_u = viewport_u / IMAGE_WIDTH as f64;
     let pixel_delta_v = viewport_v / IMAGE_HEIGHT as f64;

     // Calculate the location of the upper left pixel.
     let viewport_upper_left =
         camera_center - Vec3::new(0.0, 0.0, focal_length) - viewport_u / 2.0 - viewport_v / 2.0;
     let pixel00_loc = viewport_upper_left + 0.5 * (pixel_delta_u + pixel_delta_v);

     // Render
     env_logger::init();

     println!("P3");
     println!("{IMAGE_WIDTH} {IMAGE_HEIGHT}");
     println!("255");

     for j in 0..IMAGE_HEIGHT {
         log::info!("Scanlines remaining: {}", IMAGE_HEIGHT - j);
         for i in 0..IMAGE_WIDTH {
             let pixel_center =
                 pixel00_loc + (i as f64) * pixel_delta_u + (j as f64) * pixel_delta_v;
             let ray_direction = pixel_center - camera_center;
             let r = Ray::new(camera_center, ray_direction);

             let pixel_color = ray_color(r);
             write_color(std::io::stdout(), pixel_color)?;
         }
     }

     log::info!("Done.");
     Ok(())
 }
```

Listing 14: [main.rs] Ray-sphere intersection code (after)
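To convince yourself that the simplified form in Listing 14 returns the same root as the original one, you can evaluate both side by side. The sketch below uses plain arrays and two hypothetical free functions rather than the book's types:

```rust
fn dot(u: [f64; 3], v: [f64; 3]) -> f64 {
    u[0] * v[0] + u[1] * v[1] + u[2] * v[2]
}

fn sub(u: [f64; 3], v: [f64; 3]) -> [f64; 3] {
    [u[0] - v[0], u[1] - v[1], u[2] - v[2]]
}

/// Nearest root using the original coefficients a, b, c (as in Listing 12).
fn root_full(center: [f64; 3], radius: f64, origin: [f64; 3], dir: [f64; 3]) -> Option<f64> {
    let oc = sub(center, origin);
    let a = dot(dir, dir);
    let b = -2.0 * dot(dir, oc);
    let c = dot(oc, oc) - radius * radius;
    let discriminant = b * b - 4.0 * a * c;
    (discriminant >= 0.0).then(|| (-b - discriminant.sqrt()) / (2.0 * a))
}

/// Nearest root using h = d·(C - Q), so that b = -2h (as in Listing 14).
fn root_simplified(center: [f64; 3], radius: f64, origin: [f64; 3], dir: [f64; 3]) -> Option<f64> {
    let oc = sub(center, origin);
    let a = dot(dir, dir);
    let h = dot(dir, oc);
    let c = dot(oc, oc) - radius * radius;
    let discriminant = h * h - a * c;
    (discriminant >= 0.0).then(|| (h - discriminant.sqrt()) / a)
}

fn main() {
    let (center, origin) = ([0.0, 0.0, -1.0], [0.0, 0.0, 0.0]);
    for dir in [[0.0, 0.0, -1.0], [0.1, 0.2, -1.0], [0.0, 1.0, 0.0]] {
        println!(
            "{:?} vs {:?}",
            root_full(center, 0.5, origin, dir),
            root_simplified(center, 0.5, origin, dir)
        );
    }
}
```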

An Abstraction for Hittable Objects

Now, how about more than one sphere? While it is tempting to have an array of spheres, a very clean solution is to make an “abstract class” for anything a ray might hit, and make both a sphere and a list of spheres just something that can be hit. What that class should be called is something of a quandary — calling it an “object” would be good if not for “object oriented” programming. “Surface” is often used, with the weakness being maybe we will want volumes (fog, clouds, stuff like that). “hittable” emphasizes the member function that unites them. I don’t love any of these, but we'll go with “hittable”.

This hittable abstract class will have a hit function that takes in a ray. 1 Most ray tracers have found it convenient to add a valid interval for hits \( t_{min} \) to \( t_{max} \), so the hit only “counts” if \( t_{min} < t < t_{max} \). For the initial rays this is positive \( t \), but as we will see, it can simplify our code to have an interval \( t_{min} \) to \( t_{max} \). One design question is whether to do things like compute the normal if we hit something. We might end up hitting something closer as we do our search, and we will only need the normal of the closest thing. I will go with the simple solution and compute a bundle of stuff I will store in some structure. Here’s the abstract class:

```rust
use crate::{
    ray::Ray,
    vec3::{Point3, Vec3},
};

#[derive(Debug, Default, Clone, Copy)]
pub struct HitRecord {
    pub p: Point3,
    pub normal: Vec3,
    pub t: f64,
}

pub trait Hittable {
    fn hit(&self, r: Ray, ray_tmin: f64, ray_tmax: f64) -> Option<HitRecord>;
}
```

Listing 15: [hittable.rs] The hittable class

And here’s the sphere:

```rust
use crate::{
    hittable::{HitRecord, Hittable},
    ray::Ray,
    vec3::{Point3, dot},
};

#[derive(Debug, Clone, Copy)]
pub struct Sphere {
    center: Point3,
    radius: f64,
}

impl Sphere {
    pub fn new(center: Point3, radius: f64) -> Self {
        Self {
            center,
            radius: f64::max(0.0, radius),
        }
    }
}

impl Hittable for Sphere {
    fn hit(&self, r: Ray, ray_tmin: f64, ray_tmax: f64) -> Option<HitRecord> {
        let oc = self.center - r.origin();
        let a = r.direction().length_squared();
        let h = dot(r.direction(), oc);
        let c = oc.length_squared() - self.radius * self.radius;

        let discriminant = h * h - a * c;
        if discriminant < 0.0 {
            return None;
        }

        let sqrtd = f64::sqrt(discriminant);

        // Find the nearest root that lies in the acceptable range.
        let mut root = (h - sqrtd) / a;
        if root <= ray_tmin || ray_tmax <= root {
            root = (h + sqrtd) / a;
            if root <= ray_tmin || ray_tmax <= root {
                return None;
            }
        }

        let t = root;
        let p = r.at(t);
        let rec = HitRecord {
            t,
            p,
            normal: (p - self.center) / self.radius,
        };

        Some(rec)
    }
}
```

Listing 16: [sphere.rs] The sphere class

(Note here that we use the C++ standard function std::fmax(), which returns the maximum of the two floating-point arguments. Similarly, we will later use std::fmin(), which returns the minimum of the two floating-point arguments.) 2

- A simple Rust trait will be used instead. ↩
- The Rust standard library provides the functions f64::max() and f64::min(). ↩

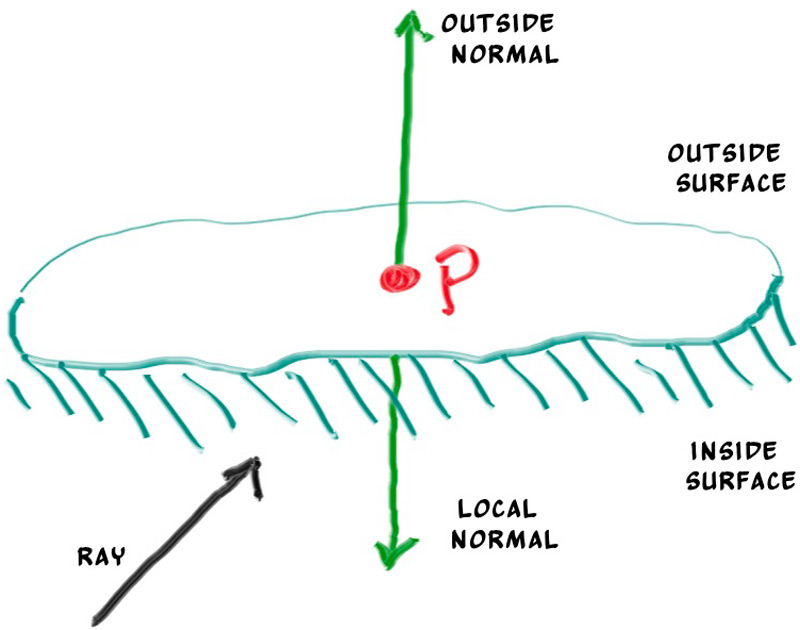

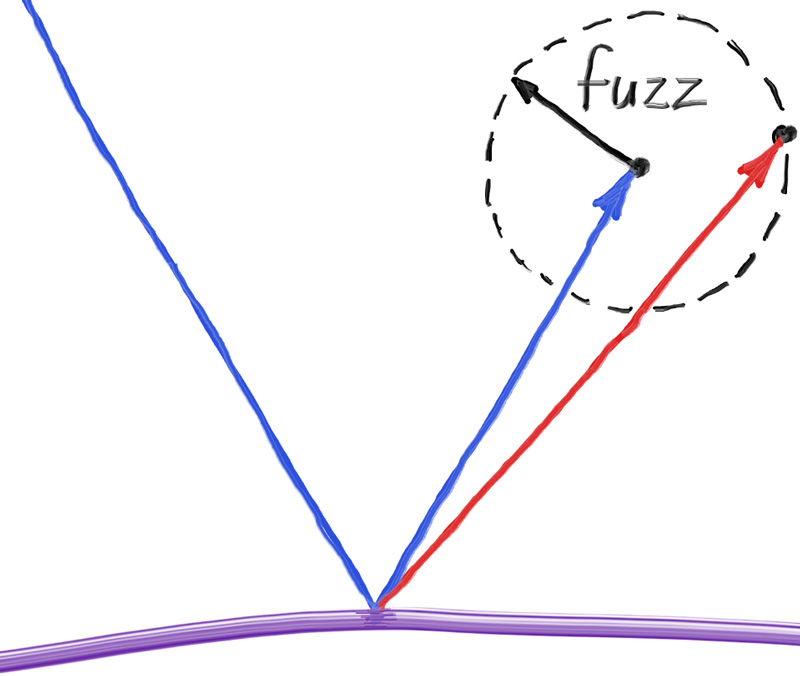

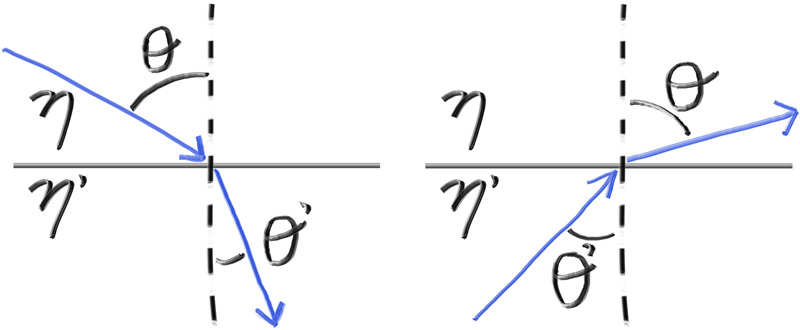
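The interval check in Sphere::hit is easiest to see with numbers. Below, the root selection is pulled out into a hypothetical free function (not part of the book's code). With \( h = 1 \), \( a = 1 \), and a discriminant of 0.25, the two roots are 0.5 and 1.5: an interval starting at 0 picks the near root, an interval that excludes the near root falls back to the far root, and an interval that ends before 0.5 rejects both.

```rust
/// Returns the nearest root (h ± sqrt(discriminant)) / a that lies strictly inside
/// (t_min, t_max), mirroring the two-step check in Sphere::hit.
fn nearest_root_in_range(h: f64, a: f64, discriminant: f64, t_min: f64, t_max: f64) -> Option<f64> {
    if discriminant < 0.0 {
        return None;
    }
    let sqrtd = discriminant.sqrt();
    let mut root = (h - sqrtd) / a;
    if root <= t_min || t_max <= root {
        root = (h + sqrtd) / a;
        if root <= t_min || t_max <= root {
            return None;
        }
    }
    Some(root)
}

fn main() {
    println!("{:?}", nearest_root_in_range(1.0, 1.0, 0.25, 0.0, f64::INFINITY)); // Some(0.5)
    println!("{:?}", nearest_root_in_range(1.0, 1.0, 0.25, 0.6, f64::INFINITY)); // Some(1.5)
    println!("{:?}", nearest_root_in_range(1.0, 1.0, 0.25, 0.0, 0.4)); // None
}
```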

Front Faces Versus Back Faces

The second design decision for normals is whether they should always point out. At present, the normal found will always be in the direction of the center to the intersection point (the normal points out). If the ray intersects the sphere from the outside, the normal points against the ray. If the ray intersects the sphere from the inside, the normal (which always points out) points with the ray. Alternatively, we can have the normal always point against the ray. If the ray is outside the sphere, the normal will point outward, but if the ray is inside the sphere, the normal will point inward.

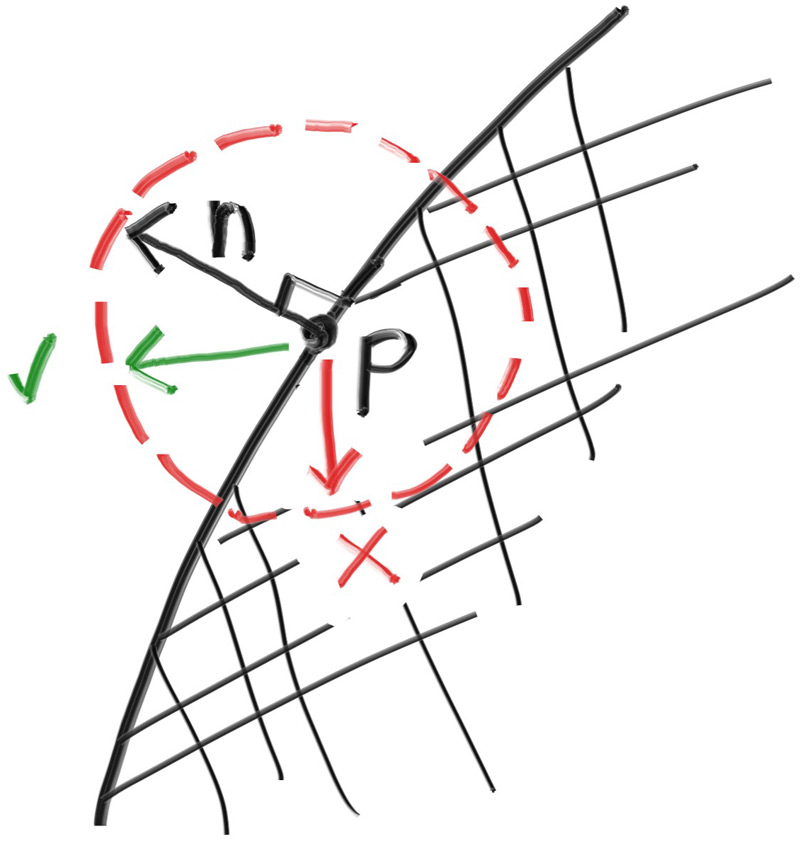

Figure 7: Possible directions for sphere surface-normal geometry

We need to choose one of these possibilities because we will eventually want to determine which side of the surface the ray is coming from. This is important for objects that are rendered differently on each side, like the text on a two-sided sheet of paper, or for objects that have an inside and an outside, like glass balls.

If we decide to have the normals always point out, then we will need to determine which side the ray is on when we color it. We can figure this out by comparing the ray with the normal. If the ray and the normal face in the same direction, the ray is inside the object; if they face in opposite directions, the ray is outside the object. This can be determined by taking the dot product of the two vectors: if their dot product is positive, the ray is inside the sphere.

```rust
// Normals always point outward: the side is decided later, when coloring.
if dot(r.direction(), outward_normal) > 0.0 {
    // ray is inside the sphere
    // ...
} else {
    // ray is outside the sphere
    // ...
}
```

Listing 17: Comparing the ray and the normal

If we decide to have the normals always point against the ray, we won't be able to use the dot product to determine which side of the surface the ray is on. Instead, we would need to store that information:

```rust
use crate::{
    ray::Ray,
    vec3::{Point3, Vec3, dot},
};

#[derive(Debug, Default, Clone, Copy)]
pub struct HitRecord {
    pub p: Point3,
    pub normal: Vec3,
    pub t: f64,
    pub front_face: bool,
}

impl HitRecord {
    pub fn set_face_normal(&mut self, r: Ray, outward_normal: Vec3) {
        // Sets the hit record normal vector.
        // NOTE: the parameter `outward_normal` is assumed to have unit length.
        let normal;
        let front_face;
        if dot(r.direction(), outward_normal) > 0.0 {
            // ray is inside the sphere
            normal = -outward_normal;
            front_face = false;
        } else {
            // ray is outside the sphere
            normal = outward_normal;
            front_face = true;
        }
        self.front_face = front_face;
        self.normal = normal;
    }
}

pub trait Hittable {
    fn hit(&self, r: Ray, ray_tmin: f64, ray_tmax: f64) -> Option<HitRecord>;
}
```

Listing 18: Remembering the side of the surface

We can set things up so that normals always point “outward” from the surface, or always point against the incident ray. The choice depends on whether you want to determine the side of the surface at the time of geometry intersection or at the time of coloring. In this book we have more material types than we have geometry types, so we'll go for less work and put the determination at geometry time. This is simply a matter of preference, and you'll see both implementations in the literature.

We add the front_face bool to the hit_record class. We'll also add a function to solve this calculation for us: set_face_normal(). For convenience we will assume that the vector passed to the new set_face_normal() function is of unit length. We could always normalize the parameter explicitly, but it's more efficient if the geometry code does this, as it's usually easier when you know more about the specific geometry.

```diff
diff --git a/src/hittable.rs b/src/hittable.rs
index b8a3fcf..8ced826 100644
--- a/src/hittable.rs
+++ b/src/hittable.rs
@@ -1,15 +1,30 @@
 use crate::{
     ray::Ray,
-    vec3::{Point3, Vec3},
+    vec3::{Point3, Vec3, dot},
 };

 #[derive(Debug, Default, Clone, Copy)]
 pub struct HitRecord {
     pub p: Point3,
     pub normal: Vec3,
     pub t: f64,
+    pub front_face: bool,
+}
+
+impl HitRecord {
+    pub fn set_face_normal(&mut self, r: Ray, outward_normal: Vec3) {
+        // Sets the hit record normal vector.
+        // NOTE: the parameter `outward_normal` is assumed to have unit length.
+
+        self.front_face = dot(r.direction(), outward_normal) < 0.0;
+        self.normal = if self.front_face {
+            outward_normal
+        } else {
+            -outward_normal
+        };
+    }
 }

 pub trait Hittable {
     fn hit(&self, r: Ray, ray_tmin: f64, ray_tmax: f64) -> Option<HitRecord>;
 }
```

Listing 19: [hittable.rs] Adding front-face tracking to hit_record

And then we add the surface side determination to the class:

```diff
diff --git a/src/sphere.rs b/src/sphere.rs
index aa651e9..86d3cbb 100644
--- a/src/sphere.rs
+++ b/src/sphere.rs
@@ -1,56 +1,57 @@
 use crate::{
     hittable::{HitRecord, Hittable},
     ray::Ray,
     vec3::{Point3, dot},
 };

 #[derive(Debug, Clone, Copy)]
 pub struct Sphere {
     center: Point3,
     radius: f64,
 }

 impl Sphere {
     pub fn new(center: Point3, radius: f64) -> Self {
         Self {
             center,
             radius: f64::max(0.0, radius),
         }
     }
 }

 impl Hittable for Sphere {
     fn hit(&self, r: Ray, ray_tmin: f64, ray_tmax: f64) -> Option<HitRecord> {
         let oc = self.center - r.origin();
         let a = r.direction().length_squared();
         let h = dot(r.direction(), oc);
         let c = oc.length_squared() - self.radius * self.radius;

         let discriminant = h * h - a * c;
         if discriminant < 0.0 {
             return None;
         }

         let sqrtd = f64::sqrt(discriminant);

         // Find the nearest root that lies in the acceptable range.
         let mut root = (h - sqrtd) / a;
         if root <= ray_tmin || ray_tmax <= root {
             root = (h + sqrtd) / a;
             if root <= ray_tmin || ray_tmax <= root {
                 return None;
             }
         }

         let t = root;
         let p = r.at(t);
-        let rec = HitRecord {
+        let mut rec = HitRecord {
             t,
             p,
-            normal: (p - self.center) / self.radius,
+            ..Default::default()
         };
+        let outward_normal = (p - self.center) / self.radius;
+        rec.set_face_normal(r, outward_normal);

         Some(rec)
     }
 }
```

Listing 20: [sphere.rs] The sphere class with normal determination
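The rule inside set_face_normal can be exercised without a full scene. This standalone sketch (hypothetical helper name, plain arrays instead of Vec3 and Ray) returns the front_face flag and the normal flipped to oppose the ray, using the same dot-product test as Listing 19:

```rust
fn dot(u: [f64; 3], v: [f64; 3]) -> f64 {
    u[0] * v[0] + u[1] * v[1] + u[2] * v[2]
}

/// Same rule as HitRecord::set_face_normal: a hit is a front face when the ray
/// travels against the outward normal; otherwise the stored normal is flipped.
fn face_normal(ray_dir: [f64; 3], outward_normal: [f64; 3]) -> (bool, [f64; 3]) {
    let front_face = dot(ray_dir, outward_normal) < 0.0;
    let normal = if front_face {
        outward_normal
    } else {
        [-outward_normal[0], -outward_normal[1], -outward_normal[2]]
    };
    (front_face, normal)
}

fn main() {
    let dir = [0.0, 0.0, -1.0];
    // Entering the sphere on its near side: the outward normal faces the camera.
    println!("{:?}", face_normal(dir, [0.0, 0.0, 1.0])); // (true, [0.0, 0.0, 1.0])
    // Leaving through the far side: the outward normal points along the ray, so it is flipped.
    println!("{:?}", face_normal(dir, [0.0, 0.0, -1.0]));
}
```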

A List of Hittable Objects

We have a generic object called a hittable that the ray can intersect with. We now add a class that stores a list of hittables:

```rust
use std::rc::Rc;

use crate::{
    hittable::{HitRecord, Hittable},
    ray::Ray,
};

#[derive(Default)]
pub struct HittableList {
    pub objects: Vec<Rc<dyn Hittable>>,
}

impl HittableList {
    pub fn new() -> Self {
        Self::default()
    }

    pub fn clear(&mut self) {
        self.objects.clear();
    }

    pub fn add(&mut self, object: Rc<dyn Hittable>) {
        self.objects.push(object);
    }
}

impl Hittable for HittableList {
    fn hit(&self, r: Ray, ray_tmin: f64, ray_tmax: f64) -> Option<HitRecord> {
        // Collect every hit inside the interval and keep the closest one (smallest t).
        self.objects
            .iter()
            .filter_map(|obj| obj.hit(r, ray_tmin, ray_tmax))
            .min_by(|a, b| a.t.partial_cmp(&b.t).expect("no NaN value"))
    }
}
```

Listing 21: [hittable_list.rs] The hittable_list class
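Listing 21 asks every object for a hit over the full interval and keeps the smallest t. The original C++ book instead narrows the upper bound as it goes, passing the closest t found so far as the new ray_tmax, so later objects reject hits that are farther away. The sketch below shows that variant with simplified stand-in types (a Hit that only stores t, and a hypothetical Wall that is always hit at a fixed t); it illustrates the design choice and is not a replacement for Listing 21.

```rust
use std::rc::Rc;

/// Stand-in hit record: only the ray parameter t (assumption for this sketch).
#[derive(Debug, Clone, Copy, PartialEq)]
struct Hit {
    t: f64,
}

trait Hittable {
    fn hit(&self, t_min: f64, t_max: f64) -> Option<Hit>;
}

/// Hypothetical object that the ray always reaches at a fixed parameter t.
struct Wall {
    t: f64,
}

impl Hittable for Wall {
    fn hit(&self, t_min: f64, t_max: f64) -> Option<Hit> {
        (t_min < self.t && self.t < t_max).then(|| Hit { t: self.t })
    }
}

/// Closest hit, found by narrowing t_max to the nearest t seen so far.
fn closest_hit(objects: &[Rc<dyn Hittable>], t_min: f64, t_max: f64) -> Option<Hit> {
    let mut closest_so_far = t_max;
    let mut result = None;
    for object in objects {
        if let Some(rec) = object.hit(t_min, closest_so_far) {
            closest_so_far = rec.t;
            result = Some(rec);
        }
    }
    result
}

fn main() {
    let objects: Vec<Rc<dyn Hittable>> = vec![
        Rc::new(Wall { t: 3.0 }),
        Rc::new(Wall { t: 1.0 }),
        Rc::new(Wall { t: 2.0 }),
    ];
    println!("{:?}", closest_hit(&objects, 0.001, f64::INFINITY)); // Some(Hit { t: 1.0 })
}
```

Both styles return the same closest hit; the narrowing version mainly saves work when an object's hit test is expensive, because objects behind the current closest hit are rejected early.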

Some New C++ Features 1

The hittable_list class code uses some C++ features that may trip you up if you're not normally a C++ programmer: vector, shared_ptr, and make_shared. 2

shared_ptr<type> is a pointer to some allocated type, with reference-counting semantics. Every time you assign its value to another shared pointer (usually with a simple assignment), the reference count is incremented. As shared pointers go out of scope (like at the end of a block or function), the reference count is decremented. Once the count goes to zero, the object is safely deleted. 3
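Rust's Rc follows the same reference-counting idea, with one difference worth knowing: a plain assignment such as let b = a; moves the pointer instead of copying it, so the count only goes up when you call Rc::clone explicitly. A minimal sketch using the standard Rc::strong_count (the counts helper is hypothetical):

```rust
use std::rc::Rc;

/// Returns the strong count at three moments: after creation, while a clone is alive,
/// and after that clone has gone out of scope.
fn counts() -> (usize, usize, usize) {
    let a = Rc::new(0.37_f64);
    let after_new = Rc::strong_count(&a);
    let while_cloned = {
        let b = Rc::clone(&a); // second owner: the count is incremented
        Rc::strong_count(&b)
    }; // b is dropped here: the count is decremented
    let after_drop = Rc::strong_count(&a);
    (after_new, while_cloned, after_drop)
}

fn main() {
    println!("{:?}", counts()); // (1, 2, 1)
}
```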

Typically, a shared pointer is first initialized with a newly-allocated object, something like this:

```rust
let double_ptr: Rc<f64> = Rc::new(0.37);
let vec3_ptr: Rc<Vec3> = Rc::new(Vec3::new(1.414214, 2.718281, 1.618034));
let sphere_ptr: Rc<Sphere> = Rc::new(Sphere::new(Point3::new(0.0, 0.0, 0.0), 1.0));
```

Listing 22: An example allocation using shared_ptr

make_shared<thing>(thing_constructor_params ...) allocates a new instance of type thing, using the constructor parameters. It returns a shared_ptr<thing>. 4

Since the type can be automatically deduced by the return type of make_shared<type>(...), the above lines can be more simply expressed using C++'s auto type specifier: 5

```rust
let double_ptr = Rc::new(0.37);
let vec3_ptr = Rc::new(Vec3::new(1.414214, 2.718281, 1.618034));
let sphere_ptr = Rc::new(Sphere::new(Point3::new(0.0, 0.0, 0.0), 1.0));
```

Listing 23: An example allocation using shared_ptr with auto type

We'll use shared pointers in our code, because it allows multiple geometries to share a common instance (for example, a bunch of spheres that all use the same color material), and because it makes memory management automatic and easier to reason about.
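Sharing one instance looks like this in Rust. Materials only appear later in the book, so the Material and Sphere types below are hypothetical stand-ins; the point is that three spheres hold Rc clones of a single material, and Rc::ptr_eq confirms they point at the same allocation.

```rust
use std::rc::Rc;

/// Hypothetical material, for illustration only.
#[derive(Debug)]
struct Material {
    albedo: [f64; 3],
}

/// Hypothetical sphere that refers to a shared material.
struct Sphere {
    radius: f64,
    material: Rc<Material>,
}

/// Builds three spheres that all share one material instance.
fn make_scene() -> (Rc<Material>, Vec<Sphere>) {
    let red = Rc::new(Material { albedo: [0.8, 0.1, 0.1] });
    let spheres = (1..=3)
        .map(|i| Sphere { radius: 0.5 * i as f64, material: Rc::clone(&red) })
        .collect();
    (red, spheres)
}

fn main() {
    let (red, spheres) = make_scene();
    for s in &spheres {
        println!("radius {} uses albedo {:?}", s.radius, s.material.albedo);
    }
    // One owner held by `red` plus one per sphere.
    println!("strong count = {}", Rc::strong_count(&red)); // strong count = 4
}
```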

std::shared_ptr is included with the <memory> header.6

The second C++ feature you may be unfamiliar with is std::vector. This is a generic array-like collection of an arbitrary type. Above, we use a collection of pointers to hittable. std::vector automatically grows as more values are added: objects.push_back(object) adds a value to the end of the std::vector member variable objects.

std::vector is included with the <vector> header.7

Finally, the using statements in listing 21 tell the compiler that we'll be getting shared_ptr and make_shared from the std library, so we don't need to prefix these with std:: every time we reference them.

1. This chapter can be safely skipped if the code from the last chapter is clear. ↩
2. The Rust equivalents are Vec, Rc, and a simple new method of the reference-counting smart pointer. ↩
3. Here we use Rc. In contrast to the C++ shared_ptr, the contained value of an Rc is immutable. Wrapping the value in a cell-based type, for example RefCell, would allow interior mutability. However, in this case all objects are created on startup and do not change over the course of the program's lifetime, which is why a plain Rc is sufficient. ↩
4. Rust has no constructors. Instead, it is convention to fill structs with a new method, to implement traits like Default or From, or to use any other method that returns Self. So, technically, something like make_shared does not exist in Rust. ↩
5. The type annotations in the last listing were not necessary; in this case Rust's let declarations can infer the types. ↩
6. Rc is found in std::rc::Rc. ↩
7. Vec is included in Rust's std prelude and does not have to be imported to be used. ↩
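For comparison, here is a minimal sketch of the interior-mutability pattern mentioned in the footnote on Rc, which this book does not need: wrapping the value in RefCell lets every Rc owner mutate it, with the borrow rules checked at run time instead of compile time. The function name bump_shared is illustrative.

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// Two owners of the same counter; mutation goes through RefCell.
fn bump_shared() -> u32 {
    let counter = Rc::new(RefCell::new(0_u32));
    let other_owner = Rc::clone(&counter);

    // With a plain Rc<u32>, `*counter += 1` would not compile:
    // Rc only hands out shared (&) references to its contents.
    *counter.borrow_mut() += 1;
    *other_owner.borrow_mut() += 1;

    // Both owners observe the same, updated value.
    let value = *counter.borrow();
    value
}

fn main() {
    println!("counter = {}", bump_shared());
}
```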

Common Constants and Utility Functions

We need some math constants that we conveniently define in their own header file. For now we only need infinity, but we will also throw our own definition of pi in there, which we will need later. We'll also throw common useful constants and future utility functions in here. This new header, rtweekend.h, will be our general main header file. 1

pub use log::*;

// Rust Std usings
pub use std::rc::Rc;

// Constants
pub const INFINITY: f64 = f64::INFINITY;
pub const PI: f64 = std::f64::consts::PI;

// Common Headers
pub use crate::{color::*, ray::*, vec3::*};

Listing 24: [prelude.rs] The rtweekend.h common header
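In the C++ original, this header also carries a small angle-conversion utility that uses pi. A Rust version might look like the sketch below; the function name and its placement in prelude.rs are assumptions, and the local PI constant only mirrors the one defined in the listing.

```rust
// Mirrors the PI constant from prelude.rs so the sketch is self-contained.
pub const PI: f64 = std::f64::consts::PI;

/// Converts an angle from degrees to radians (hypothetical prelude helper).
pub fn degrees_to_radians(degrees: f64) -> f64 {
    degrees * PI / 180.0
}

fn main() {
    println!("90 degrees = {} radians", degrees_to_radians(90.0));
}
```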

Program files will include rtweekend.h first, so all other header files (where the bulk of our code will reside) can implicitly assume that rtweekend.h has already been included. Header files still need to explicitly include any other necessary header files. We'll make some updates with these assumptions in mind.
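In Rust, the counterpart of "include rtweekend.h first" is a glob import of the prelude module at the top of each module. Here is a single-file sketch of the pattern; the module names prelude and scene and the function shared_far_plane are illustrative, and in the real crate each module would sit in its own file.

```rust
// Stand-in for prelude.rs: re-export shared items once.
mod prelude {
    pub use std::rc::Rc;
    pub const INFINITY: f64 = f64::INFINITY;
}

// Stand-in for any other module, e.g. sphere.rs.
mod scene {
    // One glob import brings in everything the prelude re-exports.
    use crate::prelude::*;

    pub fn shared_far_plane() -> Rc<f64> {
        Rc::new(INFINITY)
    }
}

fn main() {
    let far = scene::shared_far_plane();
    println!("far plane at {far}");
}
```

Items that a module needs but the prelude does not re-export (HitRecord and Hittable in the listings below) still have to be imported explicitly, just as header files still include other headers.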

// nothing changes

Listing 25: [color.rs] Assume rtweekend.h inclusion for color.h 2

diff --git a/src/hittable.rs b/src/hittable.rs
index 8ced826..a7aab5a 100644
--- a/src/hittable.rs
+++ b/src/hittable.rs
@@ -1,30 +1,27 @@
-use crate::{
-    ray::Ray,
-    vec3::{Point3, Vec3, dot},
-};
+use crate::prelude::*;

 #[derive(Debug, Default, Clone, Copy)]
 pub struct HitRecord {
     pub p: Point3,
     pub normal: Vec3,
     pub t: f64,
     pub front_face: bool,
 }

 impl HitRecord {
     pub fn set_face_normal(&mut self, r: Ray, outward_normal: Vec3) {
         // Sets the hit record normal vector.
         // NOTE: the parameter `outward_normal` is assumed to have unit length.

         self.front_face = dot(r.direction(), outward_normal) < 0.0;
         self.normal = if self.front_face {
             outward_normal
         } else {
             -outward_normal
         };
     }
 }

 pub trait Hittable {
     fn hit(&self, r: Ray, ray_tmin: f64, ray_tmax: f64) -> Option<HitRecord>;
 }

Listing 26: [hittable.rs] Assume rtweekend.h inclusion for hittable.h
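The sign test in set_face_normal is easy to check in isolation. This minimal sketch uses plain [f64; 3] arrays instead of the book's Vec3, an assumption made only to keep it self-contained; face_normal is a hypothetical free-function version of the method.

```rust
// Minimal stand-in for Vec3, only for this sketch.
type V3 = [f64; 3];

fn dot(a: V3, b: V3) -> f64 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

fn neg(a: V3) -> V3 {
    [-a[0], -a[1], -a[2]]
}

/// Mirrors HitRecord::set_face_normal: returns (front_face, stored normal).
fn face_normal(ray_dir: V3, outward_normal: V3) -> (bool, V3) {
    // A ray arriving from outside points against the outward normal.
    let front_face = dot(ray_dir, outward_normal) < 0.0;
    // The stored normal always points against the incoming ray.
    let normal = if front_face { outward_normal } else { neg(outward_normal) };
    (front_face, normal)
}

fn main() {
    let outward = [0.0, 0.0, 1.0];
    // Ray travelling in -z reaches the +z side of the surface from outside.
    println!("{:?}", face_normal([0.0, 0.0, -1.0], outward));
    // Ray travelling in +z reaches the same point from inside.
    println!("{:?}", face_normal([0.0, 0.0, 1.0], outward));
}
```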

diff --git a/src/hittable_list.rs b/src/hittable_list.rs
index 95c24e4..7841161 100644
--- a/src/hittable_list.rs
+++ b/src/hittable_list.rs
@@ -1,34 +1,32 @@
-use std::rc::Rc;
-
 use crate::{
     hittable::{HitRecord, Hittable},
-    ray::Ray,
+    prelude::*,
 };

 #[derive(Default)]
 pub struct HittableList {
     pub objects: Vec<Rc<dyn Hittable>>,
 }

 impl HittableList {
     pub fn new() -> Self {
         Self::default()
     }

     pub fn clear(&mut self) {
         self.objects.clear();
     }

     pub fn add(&mut self, object: Rc<dyn Hittable>) {
         self.objects.push(object);
     }
 }

 impl Hittable for HittableList {
     fn hit(&self, r: Ray, ray_tmin: f64, ray_tmax: f64) -> Option<HitRecord> {
         self.objects
             .iter()
             .filter_map(|obj| obj.hit(r, ray_tmin, ray_tmax))
             .min_by(|a, b| a.t.partial_cmp(&b.t).expect("no NaN value"))
     }
 }

Listing 27: [hittable_list.rs] Assume rtweekend.h inclusion for hittable_list.h
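The iterator version of hit tests every object against the full range and keeps the smallest t, while the C++ version loops and shrinks the upper bound to the closest hit found so far. Both select the same hit. The sketch below compares the two on a hypothetical Object whose hit returns its smallest root inside (tmin, tmax); it uses f64::total_cmp, one alternative to partial_cmp(...).expect(...) that does not panic on NaN.

```rust
/// Hypothetical object: its "hit" is its smallest root inside (tmin, tmax).
struct Object {
    roots: Vec<f64>,
}

impl Object {
    fn hit(&self, tmin: f64, tmax: f64) -> Option<f64> {
        self.roots
            .iter()
            .copied()
            .filter(|&t| tmin < t && t < tmax)
            .min_by(|a, b| a.total_cmp(b))
    }
}

/// Style used in hittable_list.rs: test each object on the full range, keep the minimum.
fn closest_iter(objects: &[Object], tmin: f64, tmax: f64) -> Option<f64> {
    objects
        .iter()
        .filter_map(|o| o.hit(tmin, tmax))
        .min_by(|a, b| a.total_cmp(b))
}

/// C++-style loop: shrink the upper bound to the closest hit found so far.
fn closest_loop(objects: &[Object], tmin: f64, tmax: f64) -> Option<f64> {
    let mut closest_so_far = tmax;
    let mut result = None;
    for o in objects {
        if let Some(t) = o.hit(tmin, closest_so_far) {
            closest_so_far = t;
            result = Some(t);
        }
    }
    result
}

fn main() {
    let objects = vec![
        Object { roots: vec![4.0, 6.0] },
        Object { roots: vec![-1.0, 2.5] }, // -1.0 lies behind the ray origin
        Object { roots: vec![9.0] },
    ];
    let inf = f64::INFINITY;
    println!("{:?} {:?}", closest_iter(&objects, 0.0, inf), closest_loop(&objects, 0.0, inf));
}
```

The loop can skip work because later objects are tested against a tighter range; the iterator form trades that for a shorter, side-effect-free expression.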

diff --git a/src/sphere.rs b/src/sphere.rs
index 86d3cbb..9de9f72 100644
--- a/src/sphere.rs
+++ b/src/sphere.rs
@@ -1,57 +1,56 @@
 use crate::{
     hittable::{HitRecord, Hittable},
-    ray::Ray,
-    vec3::{Point3, dot},
+    prelude::*,
 };

 #[derive(Debug, Clone, Copy)]
 pub struct Sphere {
     center: Point3,
     radius: f64,
 }

 impl Sphere {
     pub fn new(center: Point3, radius: f64) -> Self {
         Self {
             center,
             radius: f64::max(0.0, radius),
         }
     }
 }

 impl Hittable for Sphere {
     fn hit(&self, r: Ray, ray_tmin: f64, ray_tmax: f64) -> Option<HitRecord> {
         let oc = self.center - r.origin();
         let a = r.direction().length_squared();
         let h = dot(r.direction(), oc);
         let c = oc.length_squared() - self.radius * self.radius;

         let discriminant = h * h - a * c;
         if discriminant < 0.0 {
             return None;
         }

         let sqrtd = f64::sqrt(discriminant);

         // Find the nearest root that lies in the acceptable range.
         let mut root = (h - sqrtd) / a;
         if root <= ray_tmin || ray_tmax <= root {
             root = (h + sqrtd) / a;
             if root <= ray_tmin || ray_tmax <= root {
                 return None;
             }
         }

         let t = root;
         let p = r.at(t);
         let mut rec = HitRecord {
             t,
             p,
             ..Default::default()
         };
         let outward_normal = (p - self.center) / self.radius;
         rec.set_face_normal(r, outward_normal);

         Some(rec)
     }
 }

Listing 28: [sphere.rs] Assume rtweekend.h inclusion for sphere.h
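A worked example of the root selection: for the camera ray through the image center, with origin (0, 0, 0) and direction (0, 0, -1), and the small sphere at (0, 0, -1) with radius 0.5, we get oc = (0, 0, -1), a = 1, h = 1, c = 1 - 0.25 = 0.75, and a discriminant of 1 - 0.75 = 0.25. So sqrtd = 0.5 and the nearer root is t = (1 - 0.5) / 1 = 0.5. The sketch below repeats the same arithmetic on plain arrays; hit_t is a hypothetical free function that returns only t.

```rust
// Minimal stand-in for Vec3, only for this sketch.
type V3 = [f64; 3];

fn sub(a: V3, b: V3) -> V3 {
    [a[0] - b[0], a[1] - b[1], a[2] - b[2]]
}

fn dot(a: V3, b: V3) -> f64 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

/// Same root selection as Sphere::hit, returning only the ray parameter t.
fn hit_t(center: V3, radius: f64, origin: V3, dir: V3, tmin: f64, tmax: f64) -> Option<f64> {
    let oc = sub(center, origin);
    let a = dot(dir, dir);
    let h = dot(dir, oc);
    let c = dot(oc, oc) - radius * radius;
    let discriminant = h * h - a * c;
    if discriminant < 0.0 {
        return None; // the ray misses the sphere entirely
    }
    let sqrtd = discriminant.sqrt();
    // Try the nearer root first, then fall back to the farther one.
    let mut root = (h - sqrtd) / a;
    if root <= tmin || tmax <= root {
        root = (h + sqrtd) / a;
        if root <= tmin || tmax <= root {
            return None;
        }
    }
    Some(root)
}

fn main() {
    let center = [0.0, 0.0, -1.0];
    let inf = f64::INFINITY;
    // Camera ray through the image center: hits the near side at t = 0.5.
    println!("{:?}", hit_t(center, 0.5, [0.0, 0.0, 0.0], [0.0, 0.0, -1.0], 0.0, inf));
    // Ray starting at the center: the near root is negative, so the far root is used.
    println!("{:?}", hit_t(center, 0.5, center, [0.0, 0.0, -1.0], 0.0, inf));
    // Ray pointing straight up misses.
    println!("{:?}", hit_t(center, 0.5, [0.0, 0.0, 0.0], [0.0, 1.0, 0.0], 0.0, inf));
}
```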

// nothing changes

Listing 29: [vec3.rs] Assume rtweekend.h inclusion for vec3.h

And now the new main:

diff --git a/src/main.rs b/src/main.rs

index 1f26e3d..59c000b 100644

--- a/src/main.rs

+++ b/src/main.rs

@@ -1,82 +1,74 @@

-use code::{

- color::{Color, write_color},

- ray::Ray,

- vec3::{Point3, Vec3, dot, unit_vector},

-};

-

-fn hit_sphere(center: Point3, radius: f64, r: Ray) -> Option<f64> {

- let oc = center - r.origin();

- let a = r.direction().length_squared();

- let h = dot(r.direction(), oc);

- let c = oc.length_squared() - radius * radius;

- let discriminant = h * h - a * c;

-

- (discriminant >= 0.0).then(|| (h - f64::sqrt(discriminant)) / a)

-}

+use code::{hittable::Hittable, hittable_list::HittableList, prelude::*, sphere::Sphere};

-fn ray_color(r: Ray) -> Color {

- if let Some(t) = hit_sphere(Point3::new(0.0, 0.0, -1.0), 0.5, r) {

- let n = unit_vector(r.at(t) - Vec3::new(0.0, 0.0, -1.0));

- return 0.5 * Color::new(n.x() + 1.0, n.y() + 1.0, n.z() + 1.0);

+fn ray_color(r: Ray, world: &impl Hittable) -> Color {

+ if let Some(rec) = world.hit(r, 0.0, INFINITY) {

+ return 0.5 * (rec.normal + Color::new(1.0, 1.0, 1.0));

}

let unit_direction = unit_vector(r.direction());

let a = 0.5 * (unit_direction.y() + 1.0);

(1.0 - a) * Color::new(1.0, 1.0, 1.0) + a * Color::new(0.5, 0.7, 1.0)

}

fn main() -> Result<(), Box<dyn std::error::Error>> {

// Image

const ASPECT_RATIO: f64 = 16.0 / 9.0;

const IMAGE_WIDTH: i32 = 400;

// Calculate the image height, and ensure that it's at least 1.

const IMAGE_HEIGHT: i32 = {

let image_height = (IMAGE_WIDTH as f64 / ASPECT_RATIO) as i32;

if image_height < 1 { 1 } else { image_height }

};

+ // World

+

+ let mut world = HittableList::new();

+

+ world.add(Rc::new(Sphere::new(Point3::new(0.0, 0.0, -1.0), 0.5)));

+ world.add(Rc::new(Sphere::new(Point3::new(0.0, -100.5, -1.0), 100.0)));

+

// Camera

let focal_length = 1.0;

let viewport_height = 2.0;

let viewport_width = viewport_height * (IMAGE_WIDTH as f64) / (IMAGE_HEIGHT as f64);

let camera_center = Point3::new(0.0, 0.0, 0.0);

// Calculate the vectors across the horizontal and down the vertical viewport edges.

let viewport_u = Vec3::new(viewport_width, 0.0, 0.0);

let viewport_v = Vec3::new(0.0, -viewport_height, 0.0);

// Calculate the horizontal and vertical delta vectors from pixel to pixel.

let pixel_delta_u = viewport_u / IMAGE_WIDTH as f64;

let pixel_delta_v = viewport_v / IMAGE_HEIGHT as f64;

// Calculate the location of the upper left pixel.

let viewport_upper_left =

camera_center - Vec3::new(0.0, 0.0, focal_length) - viewport_u / 2.0 - viewport_v / 2.0;

let pixel00_loc = viewport_upper_left + 0.5 * (pixel_delta_u + pixel_delta_v);

// Render

env_logger::init();

println!("P3");

println!("{IMAGE_WIDTH} {IMAGE_HEIGHT}");

println!("255");

for j in 0..IMAGE_HEIGHT {

- log::info!("Scanlines remaining: {}", IMAGE_HEIGHT - j);

+ info!("Scanlines remaining: {}", IMAGE_HEIGHT - j);

for i in 0..IMAGE_WIDTH {

let pixel_center =

pixel00_loc + (i as f64) * pixel_delta_u + (j as f64) * pixel_delta_v;

let ray_direction = pixel_center - camera_center;

let r = Ray::new(camera_center, ray_direction);

- let pixel_color = ray_color(r);

+ let pixel_color = ray_color(r, &world);

write_color(std::io::stdout(), pixel_color)?;

}

}

- log::info!("Done.");

+ info!("Done.");

Ok(())

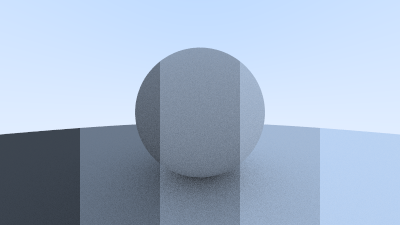

}Listing 30: [main.rs] The new main with hittables
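The new ray_color takes world: &impl Hittable, which is shorthand for a generic parameter and is resolved at compile time (static dispatch). A &dyn Hittable parameter would also compile and would route calls through a vtable. The sketch below contrasts the two with a hypothetical trait and type standing in for Hittable and HittableList.

```rust
// Hypothetical trait and type, standing in for Hittable and HittableList.
trait Hittable {
    fn hit(&self, t: f64) -> bool;
}

struct Wall {
    at: f64,
}

impl Hittable for Wall {
    fn hit(&self, t: f64) -> bool {
        t >= self.at
    }
}

// Static dispatch: a separate copy is compiled for each concrete argument type.
fn color_static(world: &impl Hittable, t: f64) -> f64 {
    if world.hit(t) { 1.0 } else { 0.0 }
}

// Dynamic dispatch: one compiled function, calls go through the vtable.
fn color_dyn(world: &dyn Hittable, t: f64) -> f64 {
    if world.hit(t) { 1.0 } else { 0.0 }
}

fn main() {
    let wall = Wall { at: 2.0 };
    println!("{} {}", color_static(&wall, 3.0), color_dyn(&wall, 1.0));
}
```

In this program the difference is small: the HittableList stores Rc<dyn Hittable>, so each per-object hit call inside the list is dynamically dispatched either way.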

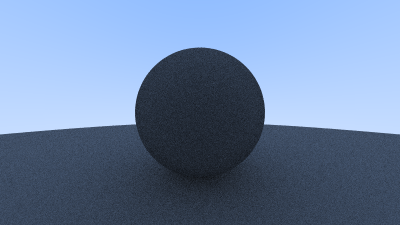

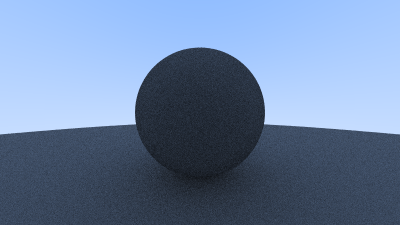

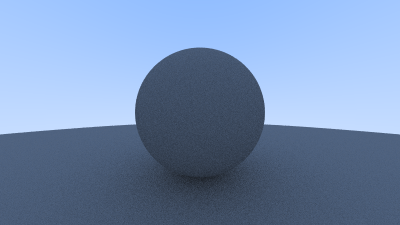

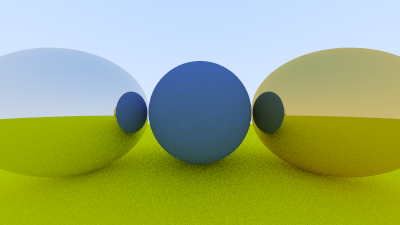

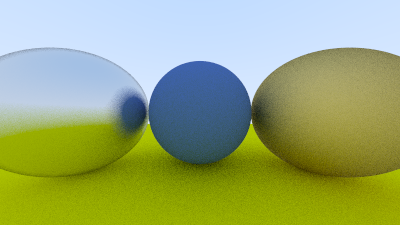

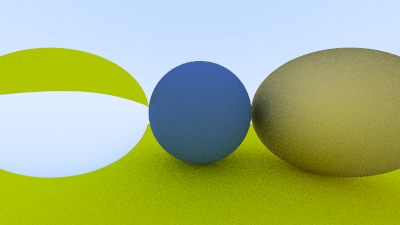

This yields a picture that is really just a visualization of where the spheres are located along with their surface normals. This is often a great way to view any flaws or specific characteristics of a geometric model.
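The colors come from the expression 0.5 * (rec.normal + Color::new(1.0, 1.0, 1.0)) in ray_color: each unit-normal component in [-1, 1] becomes a color channel in [0, 1]. A normal facing the camera, (0, 0, 1), maps to (0.5, 0.5, 1.0), and the upward-facing top of the ground sphere, (0, 1, 0), maps to (0.5, 1.0, 0.5). A minimal sketch on plain arrays; normal_to_rgb is a hypothetical name.

```rust
/// Maps a unit normal with components in [-1, 1] to RGB channels in [0, 1],
/// the same arithmetic as `0.5 * (rec.normal + Color::new(1.0, 1.0, 1.0))`.
fn normal_to_rgb(n: [f64; 3]) -> [f64; 3] {
    n.map(|c| 0.5 * (c + 1.0))
}

fn main() {
    // Normal pointing back toward the camera (+z).
    println!("{:?}", normal_to_rgb([0.0, 0.0, 1.0]));
    // Normal at the top of the large ground sphere (+y).
    println!("{:?}", normal_to_rgb([0.0, 1.0, 0.0]));
}
```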

Image 5: Resulting render of normals-colored sphere with ground

1. In Rust it is common to create a prelude for frequently used types, which we will do here instead. Note, however, that there is at the moment no plan to support custom preludes as a language feature; instead, we need to import the prelude with use crate::prelude::*. ↩
2. There is no need to use the prelude in color.rs just for the Vec3 struct. The listing is still included to match the numbering of the original book series. ↩

An Interval Class

Before we continue, we'll implement an interval class to manage real-valued intervals with a minimum and a maximum. We'll end up using this class quite often as we proceed.

#[derive(Debug, Clone, Copy)]

pub struct Interval {

pub min: f64,

pub max: f64,

}

impl Default for Interval {

fn default() -> Self {

Self::EMPTY

}

}

impl Interval {

pub const EMPTY: Self = Self {

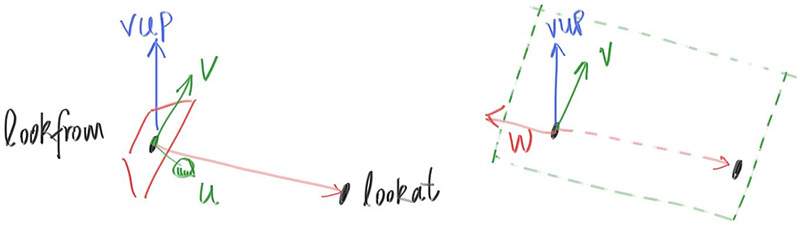

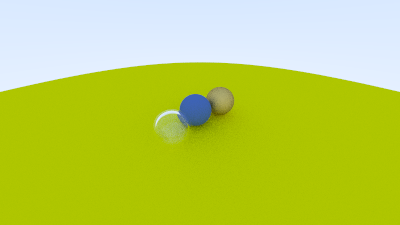

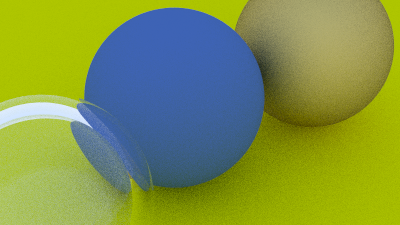

min: f64::INFINITY,