True Lambertian Reflection

Scattering reflected rays evenly over the hemisphere produces a nice soft diffuse model, but we can definitely do better. A more accurate representation of real diffuse objects is the Lambertian distribution. This distribution scatters reflected rays in a manner that is proportional to \( \cos(\phi) \), where \( \phi \) is the angle between the reflected ray and the surface normal. This means that a reflected ray is most likely to scatter in a direction near the surface normal, and less likely to scatter in directions away from the normal. This non-uniform Lambertian distribution does a better job of modeling material reflection in the real world than our previous uniform scattering.

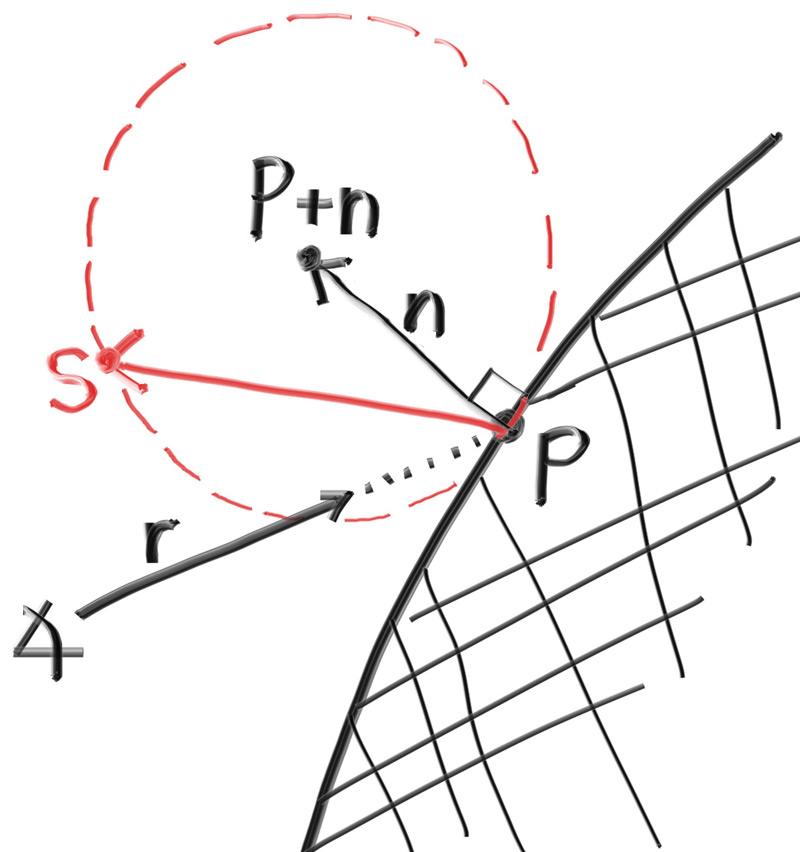
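As a quick sanity check on the cosine law, here is a minimal standalone Rust sketch that numerically integrates two densities over the hemisphere. It assumes the standard normalization \( p(\phi) = \cos(\phi)/\pi \) for the Lambertian density (the text above only states proportionality; \( 1/\pi \) is the constant that makes it integrate to one) and \( 1/(2\pi) \) for uniform hemisphere sampling. The function names are hypothetical and not part of the project.

```rust
use std::f64::consts::PI;

/// Lambertian (cosine-weighted) density over the hemisphere, as a function of
/// the angle `phi` between a direction and the surface normal: cos(phi) / pi.
fn lambertian_pdf(phi: f64) -> f64 {
    phi.cos() / PI
}

/// Uniform hemisphere density: every direction has density 1 / (2 pi).
fn uniform_pdf(_phi: f64) -> f64 {
    1.0 / (2.0 * PI)
}

/// Integrate a density that depends only on `phi` over the hemisphere.
/// The solid-angle element is sin(phi) dphi dtheta; integrating over the
/// azimuth theta contributes a factor of 2 pi. Midpoint rule in `phi`.
fn integrate_over_hemisphere(pdf: fn(f64) -> f64, steps: usize) -> f64 {
    let dphi = (PI / 2.0) / steps as f64;
    let mut sum = 0.0;
    for k in 0..steps {
        let phi = (k as f64 + 0.5) * dphi;
        sum += pdf(phi) * phi.sin() * dphi;
    }
    2.0 * PI * sum
}

fn main() {
    println!("Lambertian total: {:.6}", integrate_over_hemisphere(lambertian_pdf, 10_000));
    println!("Uniform total:    {:.6}", integrate_over_hemisphere(uniform_pdf, 10_000));
    // Density along the normal versus 60 degrees away from it.
    println!("p(0) = {:.4}, p(60 deg) = {:.4}", lambertian_pdf(0.0), lambertian_pdf(PI / 3.0));
}
```

Both totals should come out at (very nearly) 1, and the Lambertian density along the normal is twice its value at 60°, since \( \cos(60^\circ) = 1/2 \).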

We can create this distribution by adding a random unit vector to the normal vector. At the point of intersection on a surface there is the hit point, \( \mathbf{P} \), and there is the normal of the surface, \( \mathbf{n} \). At the point of intersection, the surface has exactly two sides, so there can only be two unique unit spheres tangent to it there (one for each side of the surface). These two unit spheres are displaced from the surface by the length of their radius, which is exactly one for a unit sphere.

One sphere is displaced in the direction of the surface's normal (\( \mathbf{n} \)) and the other in the opposite direction (\( -\mathbf{n} \)). This leaves us with two unit spheres that just touch the surface at the intersection point. One of the spheres therefore has its center at \( \mathbf{P} + \mathbf{n} \) and the other has its center at \( \mathbf{P} - \mathbf{n} \). The sphere centered at \( \mathbf{P} - \mathbf{n} \) is considered inside the surface, whereas the sphere centered at \( \mathbf{P} + \mathbf{n} \) is considered outside the surface.
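The two tangent-sphere centers, and the choice between them, can be sketched in a few lines of standalone Rust. This is an illustrative sketch, not project code: the small `Vec3` tuple struct stands in for the project's own vector type, `tangent_sphere_centers` and `select_tangent_center` are hypothetical names, and the side test (the sign of \( (\mathbf{O} - \mathbf{P}) \cdot \mathbf{n} \) for a hypothetical ray origin \( \mathbf{O} \)) is an assumption about how one might decide which side of the surface a point lies on.

```rust
#[derive(Clone, Copy, Debug, PartialEq)]
struct Vec3(f64, f64, f64);

impl Vec3 {
    fn add(self, o: Vec3) -> Vec3 {
        Vec3(self.0 + o.0, self.1 + o.1, self.2 + o.2)
    }
    fn sub(self, o: Vec3) -> Vec3 {
        Vec3(self.0 - o.0, self.1 - o.1, self.2 - o.2)
    }
    fn dot(self, o: Vec3) -> f64 {
        self.0 * o.0 + self.1 * o.1 + self.2 * o.2
    }
}

/// Centers of the two unit spheres tangent to the surface at hit point `p`,
/// returned as (outside, inside) = (P + n, P - n). `n` must be unit length.
fn tangent_sphere_centers(p: Vec3, n: Vec3) -> (Vec3, Vec3) {
    (p.add(n), p.sub(n))
}

/// Choose the tangent sphere on the same side of the surface as `origin`:
/// the outside one if (origin - P) . n > 0, otherwise the inside one.
fn select_tangent_center(p: Vec3, n: Vec3, origin: Vec3) -> Vec3 {
    let (outside, inside) = tangent_sphere_centers(p, n);
    if origin.sub(p).dot(n) > 0.0 {
        outside
    } else {
        inside
    }
}

fn main() {
    let p = Vec3(0.0, -0.5, -1.0); // hit point
    let n = Vec3(0.0, 1.0, 0.0); // unit surface normal
    let origin = Vec3(0.0, 0.0, 0.0); // ray origin above the surface
    // Prints: Vec3(0.0, 0.5, -1.0)
    println!("{:?}", select_tangent_center(p, n, origin));
}
```

In the camera code no explicit choice is made; `rec.normal` is used directly, which presumably relies on the hit record's normal already pointing back toward the incoming ray.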

We want to select the tangent unit sphere that is on the same side of the surface as the ray origin. Pick a random point \( \mathbf{S} \) on this unit-radius sphere and send a ray from the hit point \( \mathbf{P} \) to the random point \( \mathbf{S} \) (this is the vector \( \mathbf{S} - \mathbf{P} \)):

Figure 14: Randomly generating a vector according to Lambertian distribution
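The sampling step is short enough to try in isolation. The standalone Rust sketch below assumes a tiny xorshift generator in place of the `rand` crate and a hypothetical `lambertian_direction` helper. It picks \( \mathbf{S} \) on the sphere centered at \( \mathbf{P} + \mathbf{n} \) by rejection-sampling a point in the unit ball and normalizing it (presumably the same idea as the project's `random_unit_vector`), then estimates the mean of \( \cos(\phi) \) over many bounces. For directions distributed in proportion to \( \cos(\phi) \) that mean is \( 2/3 \), compared with \( 1/2 \) for uniform hemisphere sampling.

```rust
#[derive(Clone, Copy, Debug)]
struct Vec3(f64, f64, f64);

impl Vec3 {
    fn add(self, o: Vec3) -> Vec3 {
        Vec3(self.0 + o.0, self.1 + o.1, self.2 + o.2)
    }
    fn sub(self, o: Vec3) -> Vec3 {
        Vec3(self.0 - o.0, self.1 - o.1, self.2 - o.2)
    }
    fn scale(self, k: f64) -> Vec3 {
        Vec3(self.0 * k, self.1 * k, self.2 * k)
    }
    fn dot(self, o: Vec3) -> f64 {
        self.0 * o.0 + self.1 * o.1 + self.2 * o.2
    }
}

/// Minimal xorshift64 generator, standing in for the `rand` crate.
struct XorShift(u64);

impl XorShift {
    /// Next uniform sample in [0, 1).
    fn next_f64(&mut self) -> f64 {
        self.0 ^= self.0 << 13;
        self.0 ^= self.0 >> 7;
        self.0 ^= self.0 << 17;
        (self.0 >> 11) as f64 / (1u64 << 53) as f64
    }
}

/// Uniform random unit vector: rejection-sample a point inside the unit ball,
/// then normalize it onto the sphere.
fn random_unit_vector(rng: &mut XorShift) -> Vec3 {
    loop {
        let v = Vec3(
            2.0 * rng.next_f64() - 1.0,
            2.0 * rng.next_f64() - 1.0,
            2.0 * rng.next_f64() - 1.0,
        );
        let len2 = v.dot(v);
        if len2 > 1e-160 && len2 <= 1.0 {
            return v.scale(1.0 / len2.sqrt());
        }
    }
}

/// Bounce direction from hit point `p` with unit normal `n`: S = P + n + u is a
/// random point on the unit sphere centered at P + n, and the ray heads to S.
fn lambertian_direction(p: Vec3, n: Vec3, rng: &mut XorShift) -> Vec3 {
    let s = p.add(n).add(random_unit_vector(rng));
    s.sub(p) // equals n + u, up to rounding
}

/// Monte Carlo estimate of the mean cos(phi) between bounce direction and normal.
fn mean_cos_phi(p: Vec3, n: Vec3, samples: usize, seed: u64) -> f64 {
    let mut rng = XorShift(seed);
    let (mut sum, mut count) = (0.0, 0usize);
    for _ in 0..samples {
        let d = lambertian_direction(p, n, &mut rng);
        let len2 = d.dot(d);
        if len2 < 1e-16 {
            continue; // u is almost exactly -n, so the direction collapses to zero
        }
        sum += d.dot(n) / len2.sqrt();
        count += 1;
    }
    sum / count as f64
}

fn main() {
    let p = Vec3(0.0, -0.5, -1.0);
    let n = Vec3(0.0, 1.0, 0.0);
    println!("mean cos(phi) ~ {:.3}", mean_cos_phi(p, n, 200_000, 42));
}
```

Because \( \mathbf{S} \) always lies on the outside tangent sphere, every sampled direction satisfies \( (\mathbf{S} - \mathbf{P}) \cdot \mathbf{n} \ge 0 \), so no extra flip toward the normal is needed. The `len2 < 1e-16` guard only skips the vanishingly rare case where the direction is almost exactly zero.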

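Since \( \mathbf{S} = \mathbf{P} + \mathbf{n} + \mathbf{u} \) for some random unit vector \( \mathbf{u} \), the direction \( \mathbf{S} - \mathbf{P} \) is simply \( \mathbf{n} + \mathbf{u} \), so the change to ray_color() is fairly minimal: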

```diff
diff --git a/src/camera.rs b/src/camera.rs
index eea2b1f..3a2e772 100644
--- a/src/camera.rs
+++ b/src/camera.rs
@@ -1,166 +1,166 @@
 use crate::{hittable::Hittable, prelude::*};

 pub struct Camera {
     /// Ratio of image width over height
     pub aspect_ratio: f64,
     /// Rendered image width in pixel count
     pub image_width: i32,
     // Count of random samples for each pixel
     pub samples_per_pixel: i32,
     // Maximum number of ray bounces into scene
     pub max_depth: i32,

     /// Rendered image height
     image_height: i32,
     // Color scale factor for a sum of pixel samples
     pixel_samples_scale: f64,
     /// Camera center
     center: Point3,
     /// Location of pixel 0, 0
     pixel00_loc: Point3,
     /// Offset to pixel to the right
     pixel_delta_u: Vec3,
     /// Offset to pixel below
     pixel_delta_v: Vec3,
 }

 impl Default for Camera {
     fn default() -> Self {
         Self {
             aspect_ratio: 1.0,
             image_width: 100,
             samples_per_pixel: 10,
             max_depth: 10,
             image_height: Default::default(),
             pixel_samples_scale: Default::default(),
             center: Default::default(),
             pixel00_loc: Default::default(),
             pixel_delta_u: Default::default(),
             pixel_delta_v: Default::default(),
         }
     }
 }

 impl Camera {
     pub fn with_aspect_ratio(mut self, aspect_ratio: f64) -> Self {
         self.aspect_ratio = aspect_ratio;
         self
     }

     pub fn with_image_width(mut self, image_width: i32) -> Self {
         self.image_width = image_width;
         self
     }

     pub fn with_samples_per_pixel(mut self, samples_per_pixel: i32) -> Self {
         self.samples_per_pixel = samples_per_pixel;
         self
     }

     pub fn with_max_depth(mut self, max_depth: i32) -> Self {
         self.max_depth = max_depth;
         self
     }

     pub fn render(&mut self, world: &impl Hittable) -> std::io::Result<()> {
         self.initialize();

         println!("P3");
         println!("{} {}", self.image_width, self.image_height);
         println!("255");

         for j in 0..self.image_height {
             info!("Scanlines remaining: {}", self.image_height - j);
             for i in 0..self.image_width {
                 let mut pixel_color = Color::new(0.0, 0.0, 0.0);
                 for _sample in 0..self.samples_per_pixel {
                     let r = self.get_ray(i, j);
                     pixel_color += Self::ray_color(r, self.max_depth, world);
                 }
                 write_color(std::io::stdout(), self.pixel_samples_scale * pixel_color)?;
             }
         }
         info!("Done.");

         Ok(())
     }

     fn initialize(&mut self) {
         self.image_height = {
             let image_height = (self.image_width as f64 / self.aspect_ratio) as i32;
             if image_height < 1 { 1 } else { image_height }
         };

         self.pixel_samples_scale = 1.0 / self.samples_per_pixel as f64;

         self.center = Point3::new(0.0, 0.0, 0.0);

         // Determine viewport dimensions.
         let focal_length = 1.0;
         let viewport_height = 2.0;
         let viewport_width =
             viewport_height * (self.image_width as f64) / (self.image_height as f64);

         // Calculate the vectors across the horizontal and down the vertical viewport edges.
         let viewport_u = Vec3::new(viewport_width, 0.0, 0.0);
         let viewport_v = Vec3::new(0.0, -viewport_height, 0.0);

         // Calculate the horizontal and vertical delta vectors from pixel to pixel.
         self.pixel_delta_u = viewport_u / self.image_width as f64;
         self.pixel_delta_v = viewport_v / self.image_height as f64;

         // Calculate the location of the upper left pixel.
         let viewport_upper_left =
             self.center - Vec3::new(0.0, 0.0, focal_length) - viewport_u / 2.0 - viewport_v / 2.0;
         self.pixel00_loc = viewport_upper_left + 0.5 * (self.pixel_delta_u + self.pixel_delta_v);
     }

     fn get_ray(&self, i: i32, j: i32) -> Ray {
         // Construct a camera ray originating from the origin and directed at randomly sampled
         // point around the pixel location i, j.
         let offset = Self::sample_square();
         let pixel_sample = self.pixel00_loc
             + ((i as f64 + offset.x()) * self.pixel_delta_u)
             + ((j as f64 + offset.y()) * self.pixel_delta_v);

         let ray_origin = self.center;
         let ray_direction = pixel_sample - ray_origin;

         Ray::new(ray_origin, ray_direction)
     }

     fn sample_square() -> Vec3 {
         // Returns the vector to a random point in the [-.5,-.5]-[+.5,+.5] unit square.
         Vec3::new(
             rand::random::<f64>() - 0.5,
             rand::random::<f64>() - 0.5,
             0.0,
         )
     }

     fn _sample_disk(radius: f64) -> Vec3 {
         // Returns a random point in the unit (radius 0.5) disk centered at the origin.
         radius * random_in_unit_disk()
     }

     fn ray_color(r: Ray, depth: i32, world: &impl Hittable) -> Color {
         // If we've exceeded the ray bounce limit, no more light is gathered.
         if depth <= 0 {
             return Color::new(0.0, 0.0, 0.0);
         }

         if let Some(rec) = world.hit(r, Interval::new(0.001, INFINITY)) {
-            let direction = random_on_hemisphere(rec.normal);
+            let direction = rec.normal + random_unit_vector();
             return 0.5 * Self::ray_color(Ray::new(rec.p, direction), depth - 1, world);
         }

         let unit_direction = unit_vector(r.direction());
         let a = 0.5 * (unit_direction.y() + 1.0);
         (1.0 - a) * Color::new(1.0, 1.0, 1.0) + a * Color::new(0.5, 0.7, 1.0)
     }
 }
```

Listing 55: [camera.rs] ray_color() with replacement diffuse
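To see what the one-line change does to the bounce directions, the sketch below compares the two rules in isolation: the removed `random_on_hemisphere(rec.normal)` and the added `rec.normal + random_unit_vector()`. Both helpers are local stand-ins written for this sketch (assumed to behave like the project's functions of the same names), the xorshift generator replaces the `rand` crate, and `fraction_near_normal` is a hypothetical name. It counts how often a bounce lands within 60° of the normal.

```rust
#[derive(Clone, Copy, Debug)]
struct Vec3(f64, f64, f64);

impl Vec3 {
    fn add(self, o: Vec3) -> Vec3 {
        Vec3(self.0 + o.0, self.1 + o.1, self.2 + o.2)
    }
    fn scale(self, k: f64) -> Vec3 {
        Vec3(self.0 * k, self.1 * k, self.2 * k)
    }
    fn dot(self, o: Vec3) -> f64 {
        self.0 * o.0 + self.1 * o.1 + self.2 * o.2
    }
}

/// Minimal xorshift64 generator, standing in for the `rand` crate.
struct XorShift(u64);

impl XorShift {
    /// Next uniform sample in [0, 1).
    fn next_f64(&mut self) -> f64 {
        self.0 ^= self.0 << 13;
        self.0 ^= self.0 >> 7;
        self.0 ^= self.0 << 17;
        (self.0 >> 11) as f64 / (1u64 << 53) as f64
    }
}

/// Uniform random unit vector via rejection sampling in the unit ball.
fn random_unit_vector(rng: &mut XorShift) -> Vec3 {
    loop {
        let v = Vec3(
            2.0 * rng.next_f64() - 1.0,
            2.0 * rng.next_f64() - 1.0,
            2.0 * rng.next_f64() - 1.0,
        );
        let len2 = v.dot(v);
        if len2 > 1e-160 && len2 <= 1.0 {
            return v.scale(1.0 / len2.sqrt());
        }
    }
}

/// Uniform direction on the hemisphere around `n`: flip u if it points inward.
fn random_on_hemisphere(n: Vec3, rng: &mut XorShift) -> Vec3 {
    let u = random_unit_vector(rng);
    if u.dot(n) > 0.0 { u } else { u.scale(-1.0) }
}

/// Fraction of bounce directions within `max_angle_deg` of the normal (0, 1, 0),
/// using either the new rule (`lambertian == true`) or the old one.
fn fraction_near_normal(lambertian: bool, max_angle_deg: f64, samples: usize, seed: u64) -> f64 {
    let n = Vec3(0.0, 1.0, 0.0);
    let cos_limit = max_angle_deg.to_radians().cos();
    let mut rng = XorShift(seed);
    let (mut near, mut counted) = (0usize, 0usize);
    for _ in 0..samples {
        let d = if lambertian {
            n.add(random_unit_vector(&mut rng)) // new: rec.normal + random_unit_vector()
        } else {
            random_on_hemisphere(n, &mut rng) // old: random_on_hemisphere(rec.normal)
        };
        let len2 = d.dot(d);
        if len2 < 1e-16 {
            continue; // skip the degenerate n + u ~ 0 case
        }
        counted += 1;
        if d.dot(n) / len2.sqrt() > cos_limit {
            near += 1;
        }
    }
    near as f64 / counted as f64
}

fn main() {
    println!("within 60 deg, old (uniform):    {:.3}", fraction_near_normal(false, 60.0, 200_000, 7));
    println!("within 60 deg, new (Lambertian): {:.3}", fraction_near_normal(true, 60.0, 200_000, 7));
}
```

For uniform hemisphere sampling the expected fraction is \( 1 - \cos(60^\circ) = 0.5 \); for the cosine distribution it is \( \sin^2(60^\circ) = 0.75 \), so the Monte Carlo estimates should land close to those two values.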

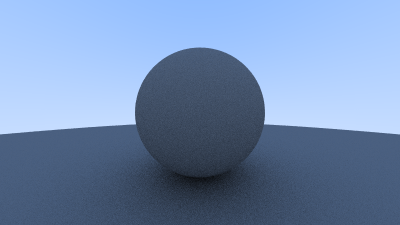

After rendering we get a similar image:

Image 10: Correct rendering of Lambertian spheres

It's hard to tell the difference between these two diffuse methods, given that our scene of two spheres is so simple, but you should be able to notice two important visual differences:

- The shadows are more pronounced after the change

- Both spheres are tinted blue from the sky after the change

Both of these changes are due to the less uniform scattering of the light rays: more rays scatter toward the normal. This means that diffuse objects appear darker, because less light bounces toward the camera. For the shadows, more light bounces straight up, so the area underneath the sphere is darker.
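The shadow claim can be checked with a rough experiment. The sketch assumes the book's two-sphere scene (a radius-0.5 sphere centered at \( (0, 0, -1) \) resting on a very large ground sphere), approximates the ground near the contact point as the plane \( y = -0.5 \), and measures what fraction of bounce rays leaving a ground point just beside the contact point are blocked by the small sphere. Blocked rays cannot reach the sky directly, so a higher fraction is only a rough proxy for a darker shadow. `hits_sphere` and `occluded_fraction` are hypothetical names, and the xorshift generator replaces the `rand` crate.

```rust
#[derive(Clone, Copy, Debug)]
struct Vec3(f64, f64, f64);

impl Vec3 {
    fn add(self, o: Vec3) -> Vec3 {
        Vec3(self.0 + o.0, self.1 + o.1, self.2 + o.2)
    }
    fn sub(self, o: Vec3) -> Vec3 {
        Vec3(self.0 - o.0, self.1 - o.1, self.2 - o.2)
    }
    fn scale(self, k: f64) -> Vec3 {
        Vec3(self.0 * k, self.1 * k, self.2 * k)
    }
    fn dot(self, o: Vec3) -> f64 {
        self.0 * o.0 + self.1 * o.1 + self.2 * o.2
    }
}

/// Minimal xorshift64 generator, standing in for the `rand` crate.
struct XorShift(u64);

impl XorShift {
    fn next_f64(&mut self) -> f64 {
        self.0 ^= self.0 << 13;
        self.0 ^= self.0 >> 7;
        self.0 ^= self.0 << 17;
        (self.0 >> 11) as f64 / (1u64 << 53) as f64
    }
}

fn random_unit_vector(rng: &mut XorShift) -> Vec3 {
    loop {
        let v = Vec3(2.0 * rng.next_f64() - 1.0, 2.0 * rng.next_f64() - 1.0, 2.0 * rng.next_f64() - 1.0);
        let len2 = v.dot(v);
        if len2 > 1e-160 && len2 <= 1.0 {
            return v.scale(1.0 / len2.sqrt());
        }
    }
}

fn random_on_hemisphere(n: Vec3, rng: &mut XorShift) -> Vec3 {
    let u = random_unit_vector(rng);
    if u.dot(n) > 0.0 { u } else { u.scale(-1.0) }
}

/// True if the ray `origin + t * dir` hits the sphere for some t > 0.
/// Assumes `origin` is outside the sphere, so both roots share the sign of `h`.
fn hits_sphere(origin: Vec3, dir: Vec3, center: Vec3, radius: f64) -> bool {
    let oc = center.sub(origin);
    let a = dir.dot(dir);
    let h = dir.dot(oc);
    let c = oc.dot(oc) - radius * radius;
    h > 0.0 && h * h - a * c >= 0.0
}

/// Fraction of bounce rays from a ground point beside the contact point that the
/// small sphere blocks, for the new (`lambertian == true`) or old scatter rule.
fn occluded_fraction(lambertian: bool, samples: usize, seed: u64) -> f64 {
    let p = Vec3(0.2, -0.5, -1.0); // point on the assumed ground plane y = -0.5
    let n = Vec3(0.0, 1.0, 0.0); // ground normal
    let center = Vec3(0.0, 0.0, -1.0); // assumed small sphere, radius 0.5
    let mut rng = XorShift(seed);
    let (mut blocked, mut counted) = (0usize, 0usize);
    for _ in 0..samples {
        let d = if lambertian { n.add(random_unit_vector(&mut rng)) } else { random_on_hemisphere(n, &mut rng) };
        if d.dot(d) < 1e-16 {
            continue; // degenerate n + u ~ 0 direction
        }
        counted += 1;
        if hits_sphere(p, d, center, 0.5) {
            blocked += 1;
        }
    }
    blocked as f64 / counted as f64
}

fn main() {
    println!("blocked, Lambertian: {:.3}", occluded_fraction(true, 200_000, 11));
    println!("blocked, uniform:    {:.3}", occluded_fraction(false, 200_000, 11));
}
```

Working the integrals out for this assumed geometry gives about 0.80 of the bounces blocked under the Lambertian rule versus about 0.63 under the uniform rule. More rays head upward, where the sphere sits, so the ground underneath it sees less of the sky.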

Few common, everyday objects are perfectly diffuse, so our visual intuition of how these objects behave under light can be poorly formed. As scenes become more complicated over the course of the book, you are encouraged to switch between the different diffuse renderers presented here. Most scenes of interest will contain a large amount of diffuse material, so you can gain valuable insight by understanding how the different diffuse methods affect the lighting of a scene.